The AI Security Maturity Model: Where Does Your Enterprise Stand?

What is an AI Security Maturity Model?

An AI security maturity model is a structured framework that defines how well prepared an organization is to manage the security, governance, and responsible use of artificial intelligence. It outlines progressive stages of capability across areas such as data controls, model oversight, access management, monitoring, and response, allowing teams to evaluate their current state and plan targeted improvements.

AI Security Maturity Levels Overview

AI security maturity progresses through clearly defined stages that show how an enterprise evolves from limited understanding to advanced, organization-wide adoption of artificial intelligence. The table below presents these levels in a structured view that supports accurate evaluation and future planning:

How to Assess Your AI Security Maturity Level

Evaluating your maturity level requires a structured analysis of data handling, model oversight, access patterns, monitoring practices, and operational readiness. The following criteria explain how organizations perform this assessment and how their results align with broader industry expectations.

What the Maturity Criteria Include

Maturity criteria focus on measurable indicators that show how well an organization manages AI security across its environment. These indicators typically include clarity of governance, quality of data controls, strength of identity and access restrictions, model oversight procedures, monitoring depth, response readiness, and compliance alignment. Each criterion reflects real capabilities rather than aspirational goals, which creates a clear view of current readiness.

How to Score Data, Model, and Access Controls

Data, model, and access scoring evaluate how well an organization protects information used by AI, supervises model behavior, and restricts who can interact with AI systems. Teams review data classification, storage practices, and input checks, along with steps for validating model outputs, detecting drift, and auditing behavior. Scoring also examines the depth of identity verification, privilege limitations, and visibility into user activity across AI tools. These scores reveal where controls are strong and where improvement is required.
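To make the scoring concrete, the sketch below rates each control on an assumed 1 to 5 scale, averages the ratings per domain, and lists the weakest individual controls. The criterion names and ratings are hypothetical placeholders for an organization's own assessment results. Equal weighting is also an assumption; teams often weight controls by the sensitivity of the data involved.

```python
from statistics import fmean

# Hypothetical ratings on an assumed 1-5 scale:
# 1 = absent or ad hoc, 5 = fully implemented and routinely verified.
ratings: dict[str, dict[str, int]] = {
    "data": {"classification": 3, "storage": 4, "input_checks": 2},
    "model": {"output_validation": 2, "drift_detection": 1, "behavior_audits": 2},
    "access": {"identity_verification": 4, "least_privilege": 3, "activity_visibility": 2},
}


def domain_scores(ratings: dict[str, dict[str, int]]) -> dict[str, float]:
    """Average the control ratings within each domain (equal weights assumed)."""
    return {domain: round(fmean(items.values()), 2) for domain, items in ratings.items()}


def weakest_controls(ratings: dict[str, dict[str, int]], threshold: int = 2) -> list[tuple[str, str, int]]:
    """List controls rated at or below `threshold`, lowest rating first."""
    weak = [
        (domain, control, score)
        for domain, items in ratings.items()
        for control, score in items.items()
        if score <= threshold
    ]
    return sorted(weak, key=lambda item: item[2])


print(domain_scores(ratings))  # {'data': 3.0, 'model': 1.67, 'access': 3.0}
print(weakest_controls(ratings)[0])  # ('model', 'drift_detection', 1)
```

The per-domain averages show which area is weak overall, while the control list keeps a single very low rating (here, drift detection) from being hidden by stronger neighbors.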

How Your Score Compares With Industry Benchmarks

After scoring, organizations compare their results with established benchmarks from recognized models such as CNA, MITRE, OWASP AIMA, and cybersecurity-focused maturity frameworks. This comparison shows how their capabilities align with common patterns across similar enterprises, including typical readiness levels for governance, monitoring, access management, and operational use. Benchmarking highlights realistic next steps and helps teams understand how their security posture compares with peers in the same stage of AI adoption.
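A benchmark comparison can be reduced to a gap analysis between the organization's domain scores and a target profile. In this sketch the target values are placeholders, not published figures from MITRE, OWASP AIMA, or any other framework; a team would substitute the levels it takes from whichever benchmark it uses. Domains missing from the current assessment are treated as scoring zero, an assumption that makes unassessed areas surface as the largest gaps.

```python
# Placeholder target profile on the same assumed 1-5 scale.
# These numbers are illustrative, not values from any published benchmark.
TARGET: dict[str, float] = {
    "governance": 3.0, "data": 3.5, "model": 3.0, "access": 3.5, "monitoring": 3.0,
}


def gap_report(current: dict[str, float], target: dict[str, float]) -> list[tuple[str, float]]:
    """Return (domain, gap) pairs where the current score is below target,
    largest gap first. Unassessed domains count as 0.0."""
    gaps = [(domain, round(goal - current.get(domain, 0.0), 2)) for domain, goal in target.items()]
    return sorted((g for g in gaps if g[1] > 0), key=lambda g: g[1], reverse=True)


current = {"governance": 2.5, "data": 3.0, "model": 1.67, "access": 3.0, "monitoring": 3.5}
for domain, gap in gap_report(current, TARGET):
    print(f"{domain}: {gap} below target")
```

Domains that already meet or exceed the target (monitoring, in this example) drop out of the report, so the output reads directly as a prioritized list of next steps.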

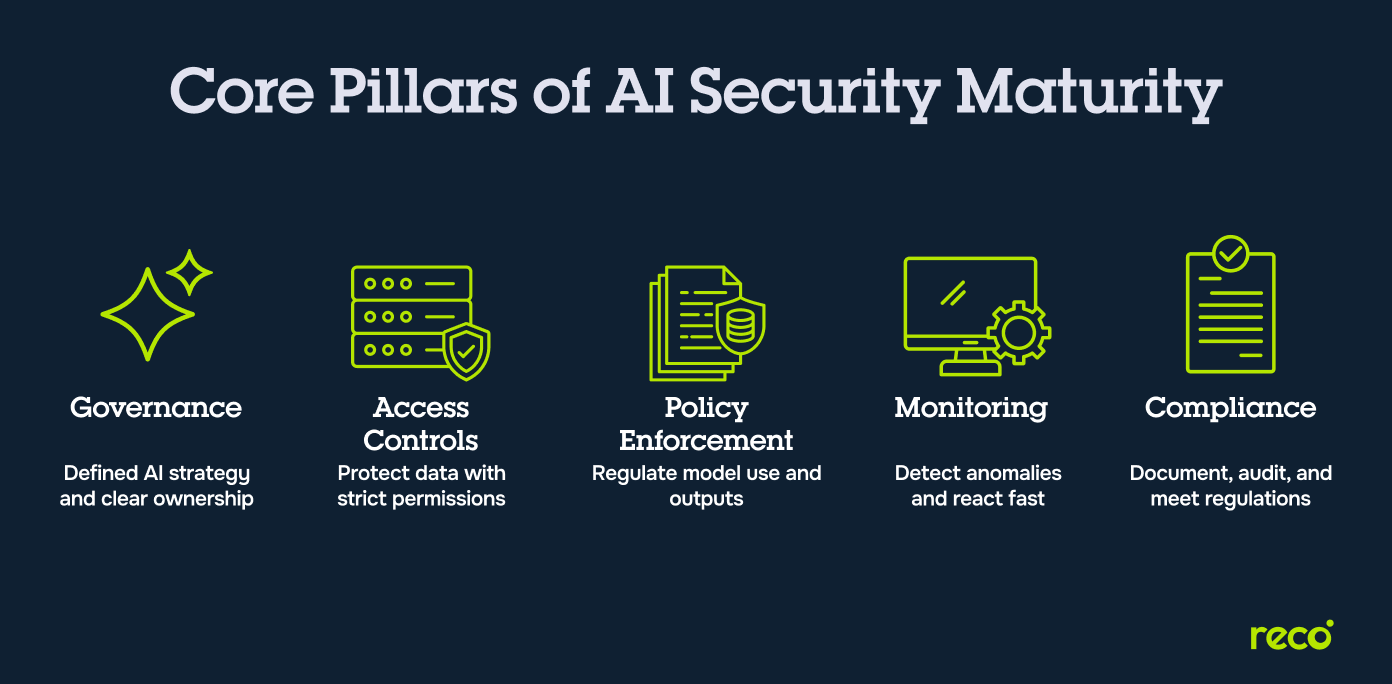

Pillars of the AI Security Maturity Model

AI security maturity is built on foundational elements that shape how an enterprise manages data, models, access, monitoring, and oversight. These pillars represent the core areas that determine how effectively an organization can secure and govern artificial intelligence.

- Clear Strategy and Governance: The organization sets defined objectives for AI use, maintains clear ownership, and follows structured governance practices that guide model selection, data use, access expectations, and oversight across teams.

- Strong Data and Access Controls: Information used by AI is classified, monitored, and protected with strict identity management and permission structures. This includes limiting access to sensitive inputs, outputs, and AI-connected systems.

- Secure Model Access and Policy Enforcement: Models operate under enforced policies that control who can query them, what data can be processed, and how outputs are handled. Controls include model auditability, behavioral tracking, and alignment with internal rules.

- Ongoing Monitoring and Incident Response: AI activity is continuously observed for unusual behavior, misuse, or incorrect model actions. Security teams maintain defined response procedures that address data exposure, unauthorized access, or harmful model output.

- Compliance, Ethics, and Reporting: The organization aligns AI systems with legal requirements, ethical expectations, and internal accountability standards. Documentation, audit readiness, and transparent reporting support responsible adoption and regulatory compliance.
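The model access and policy enforcement pillar can be illustrated with a deny-by-default check that combines two conditions named above: who may query a model and what data it may process. The model names, roles, and four-level classification scheme are hypothetical. Real deployments usually enforce rules like these in an identity provider, API gateway, or policy engine rather than in application code.

```python
from dataclasses import dataclass

# Assumed classification scheme, ordered from least to most sensitive.
LEVELS = ["public", "internal", "confidential", "restricted"]

# Hypothetical policy: roles allowed to query each model and the most
# sensitive data classification each model is approved to process.
MODEL_POLICY = {
    "support-assistant": {"roles": {"support", "admin"}, "max_level": "internal"},
    "finance-analyst": {"roles": {"finance", "admin"}, "max_level": "confidential"},
}


@dataclass
class Request:
    user_role: str
    model: str
    data_level: str


def evaluate(req: Request) -> tuple[bool, str]:
    """Deny by default; allow only approved models, roles, and data levels."""
    policy = MODEL_POLICY.get(req.model)
    if policy is None:
        return False, "model not approved"
    if req.user_role not in policy["roles"]:
        return False, "role not permitted for this model"
    # Unlabeled data is treated as more sensitive than any known level.
    level = LEVELS.index(req.data_level) if req.data_level in LEVELS else len(LEVELS)
    if level > LEVELS.index(policy["max_level"]):
        return False, "data classification exceeds model approval"
    return True, "allowed"


print(evaluate(Request("support", "support-assistant", "confidential")))
# (False, 'data classification exceeds model approval')
```

Returning a reason alongside each decision supports the auditability this pillar describes, since every denial can be logged with the rule that caused it.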

Common Challenges Slowing AI Security Growth

Organizations often encounter recurring obstacles that limit safe and responsible AI adoption. The table below outlines the most common challenges that prevent teams from reaching higher maturity levels:

Measuring AI Access Risk in the Maturity Model

AI access risk is a core indicator of enterprise security maturity because it shows how well an organization controls who can interact with AI systems and how those interactions are monitored. The following factors outline how teams evaluate access patterns and the associated exposure.

Evaluating User Access Across AI Tools

Teams examine how users interact with AI systems across SaaS platforms, internal applications, and external services. This evaluation focuses on permission structures, the types of data users can submit, the frequency of AI activity, and the presence of uncontrolled or unknown access paths. The review helps identify where visibility is limited and where access decisions introduce unnecessary exposure.
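One way to start this evaluation is to aggregate access events by tool: which tools are outside the approved list, which receive sensitive data, and who uses each one. The event fields, tool names, and approved list below are hypothetical. In practice the events would come from SaaS audit logs, network or browser telemetry, or an identity provider, each with its own schema.

```python
from collections import Counter

# Hypothetical events; field names are illustrative, not a vendor schema.
events = [
    {"user": "ana", "tool": "approved-chat", "data_class": "internal"},
    {"user": "ben", "tool": "free-summarizer", "data_class": "confidential"},
    {"user": "ana", "tool": "free-summarizer", "data_class": "public"},
    {"user": "cai", "tool": "approved-chat", "data_class": "confidential"},
]
APPROVED_TOOLS = {"approved-chat"}
SENSITIVE = {"confidential", "restricted"}


def access_review(events: list[dict[str, str]]) -> dict:
    """Summarize AI access by tool to expose unapproved paths and sensitive submissions."""
    users_per_tool: dict[str, set[str]] = {}
    for e in events:
        users_per_tool.setdefault(e["tool"], set()).add(e["user"])
    return {
        # Usage of tools that are not on the approved list (shadow AI).
        "unapproved": Counter(e["tool"] for e in events if e["tool"] not in APPROVED_TOOLS),
        # Sensitive submissions per tool, approved or not.
        "sensitive_by_tool": Counter(e["tool"] for e in events if e["data_class"] in SENSITIVE),
        # Highest exposure: sensitive data sent to an unapproved tool.
        "high_exposure": {
            e["tool"] for e in events
            if e["tool"] not in APPROVED_TOOLS and e["data_class"] in SENSITIVE
        },
        "users_per_tool": users_per_tool,
    }


report = access_review(events)
print(report["high_exposure"])  # {'free-summarizer'}
```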

Mapping High-Risk Identities and Privileged Accounts

Organizations identify users with elevated permissions who can influence model behavior, view sensitive outputs, or connect AI systems to confidential data sources. This mapping includes service accounts, API keys, administrative identities, and individuals with extended access to AI functions. Understanding these identities reveals where concentrated risk may impact data protection and model oversight.
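This mapping can be expressed as a small set of rules over an identity inventory. The sketch flags identities that meet at least two risk conditions: administrative rights, a non-human credential (service account or API key), privileged AI permissions, or AI access combined with reach into sensitive data sources. The permission names and the two-condition threshold are assumptions chosen for illustration, not a standard.

```python
from dataclasses import dataclass, field

# Hypothetical permission names that let an identity change model behavior,
# read outputs broadly, or connect AI systems to new data sources.
PRIVILEGED_SCOPES = {"model:configure", "outputs:read_all", "connector:create"}


@dataclass
class Identity:
    name: str
    kind: str  # assumed labels: "human", "service_account", or "api_key"
    is_admin: bool = False
    ai_scopes: set[str] = field(default_factory=set)
    sensitive_sources: int = 0  # confidential data sources this identity can reach


def risk_reasons(i: Identity) -> list[str]:
    """Explain why an identity concentrates AI-related risk."""
    reasons = []
    if i.is_admin:
        reasons.append("administrative identity")
    if i.kind in {"service_account", "api_key"}:
        reasons.append("non-human credential")
    privileged = i.ai_scopes & PRIVILEGED_SCOPES
    if privileged:
        reasons.append("privileged AI scopes: " + ", ".join(sorted(privileged)))
    if i.ai_scopes and i.sensitive_sources > 0:
        reasons.append(f"AI access plus {i.sensitive_sources} sensitive data source(s)")
    return reasons


def high_risk(identities: list[Identity], min_reasons: int = 2) -> dict[str, list[str]]:
    """Return identities matching at least `min_reasons` conditions, with the reasons."""
    flagged = {}
    for i in identities:
        reasons = risk_reasons(i)
        if len(reasons) >= min_reasons:
            flagged[i.name] = reasons
    return flagged


inventory = [
    Identity("etl-bot", "service_account", ai_scopes={"connector:create"}, sensitive_sources=3),
    Identity("dana", "human", is_admin=True, ai_scopes={"model:configure"}),
    Identity("eli", "human", ai_scopes={"chat:use"}),
]
print(high_risk(inventory))
```

Keeping the reasons rather than only a numeric score makes the output usable in an access review: each flagged identity comes with the specific permission or connection to remove or justify.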

Detecting Anomalous AI Access Behaviors

Security teams track patterns such as unusual prompt activity, unexpected access times, rapid high-volume usage, and interactions that involve sensitive information or attempts to bypass internal rules. These signals highlight misuse, compromised accounts, or attempts to manipulate model behavior. Continuous analysis of these events supports early detection of harmful actions and aligns with the monitoring expectations found in modern AI maturity frameworks.
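Two of these signals, rapid high-volume usage and unexpected access times, can be checked with simple statistics. The sketch compares a user's daily prompt count with that user's own baseline using a z-score and flags access outside working hours. The seven-day minimum baseline, the threshold of 3, and the 07:00 to 20:00 working window are assumptions to tune; production detection usually combines many signals rather than alerting on a single one.

```python
from statistics import mean, pstdev


def volume_anomaly(history: list[int], today: int, z_threshold: float = 3.0) -> bool:
    """Flag today's prompt count if it is more than `z_threshold` standard
    deviations above this user's own historical daily mean."""
    if len(history) < 7:
        return False  # assumed minimum baseline; too little history to judge
    mu, sigma = mean(history), pstdev(history)
    if sigma == 0:
        # Perfectly flat baseline: fall back to an assumed "more than double" rule.
        return today > 2 * mu
    return (today - mu) / sigma > z_threshold


def off_hours(hour: int, start: int = 7, end: int = 20) -> bool:
    """True when an access hour (0-23, local time) falls outside the assumed working window."""
    return not (start <= hour < end)


baseline = [20, 22, 19, 25, 21, 23, 20]  # hypothetical daily prompt counts
print(volume_anomaly(baseline, 90), volume_anomaly(baseline, 24))  # True False
print(off_hours(3), off_hours(10))  # True False
```

Using each user's own baseline, rather than one global threshold, avoids constantly flagging heavy but legitimate users while still catching a sudden change in behavior that may indicate a compromised account.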

How to Improve AI Security Maturity

Improving AI security maturity requires consistent, structured action across governance, data handling, access oversight, and monitoring. The steps below reflect the practices recognized across major AI maturity frameworks and help organizations progress toward stronger security outcomes.

- Set Clear Roles and Policies: Organizations define who owns AI security, who approves new use cases, and how data, models, and access should be managed. Clear guidance ensures that teams work under a unified structure instead of isolated decision-making.

- Enforce Strong Data and Access Controls: Information used by AI is classified, monitored, and protected with strict identity management. Access is limited to approved users, and sensitive inputs and outputs are controlled through defined permission structures.

- Track and Review All AI Activity: Teams observe AI interactions across tools to understand how users submit data, what models produce, and where activity may introduce risk. Regular analysis helps identify unsafe patterns and maintain visibility.

- Use Continuous Monitoring and Alerts: Automated systems evaluate behavior such as unusual prompt use, unexpected identity activity, and other indicators of misuse. Early detection supports rapid response and aligns with best practices across modern AI maturity models.

- Run Regular Maturity Reviews: Organizations compare their progress with recognized benchmarks and measure improvements across governance, data control, model oversight, and operational practices. Frequent reviews help teams set new targets and adjust to evolving requirements.
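The continuous monitoring step often starts with streaming threshold rules. This sketch raises an alert when one identity sends more than a set number of AI requests within a sliding time window, one way to catch scripted misuse or a leaked API key. The limit of 50 requests per 60 seconds is an arbitrary placeholder, and the code assumes events for each identity arrive in timestamp order.

```python
from collections import defaultdict, deque


class RateAlert:
    """Alert when a single identity exceeds `limit` AI requests within any
    `window_s`-second sliding window. Default thresholds are placeholders."""

    def __init__(self, limit: int = 50, window_s: float = 60.0) -> None:
        self.limit = limit
        self.window_s = window_s
        # Recent request timestamps per identity, oldest first.
        self._recent: defaultdict[str, deque[float]] = defaultdict(deque)

    def observe(self, identity: str, ts: float) -> bool:
        """Record one request at time `ts` (seconds); return True if it breaches the limit."""
        window = self._recent[identity]
        window.append(ts)
        # Evict timestamps older than the window; assumes in-order arrival.
        while ts - window[0] > self.window_s:
            window.popleft()
        return len(window) > self.limit


monitor = RateAlert(limit=3, window_s=10)
print([monitor.observe("svc-key-1", t) for t in (0, 1, 2, 3)])  # [False, False, False, True]
```

Keeping a separate deque per identity means one noisy account cannot mask or trigger alerts for another, and memory stays bounded by the number of requests inside each window.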

Business Impact of Higher AI Security Maturity

Higher maturity improves the way an enterprise manages data, oversees AI behavior, and supports responsible adoption across teams. These outcomes reflect the measurable advantages organizations experience as they strengthen their AI security foundations.

Lower Risk of Data Exposure

Higher maturity strengthens data handling, classification, permission management, and input controls, which reduces the likelihood of sensitive information entering AI systems without protection. Improved oversight of user activity and model behavior further limits accidental or unauthorized disclosure.

Safer and Faster AI Adoption Across Teams

Clear governance, predictable access management, and defined security requirements create an environment where new AI tools and workflows can be adopted with confidence. Teams gain structured guidance on what is allowed, how data should be handled, and how to maintain alignment with organizational policies, which reduces friction and enables steady expansion.

Improved Enterprise Trust and Transparency

Stronger monitoring, consistent reporting, and clear accountability improve internal and external trust in AI use. Stakeholders gain visibility into how models work, how decisions are overseen, and how risks are managed. This transparency supports compliance readiness, executive confidence, and positive engagement across technical and non-technical teams.

How Reco Elevates Your AI Security Maturity

Reco strengthens AI security maturity by providing visibility into AI usage, enforcing clear controls, and supporting faster investigations across SaaS and AI-powered environments. The capabilities below are those described in Reco’s platform documentation.

- Complete Visibility Into AI Interactions and Data Flows: Reco discovers AI tools, agents, and integrations inside SaaS applications and identifies how users interact with them. This includes visibility into prompts, actions, and sensitive information shared with AI features, which supports accurate risk evaluation.

- Automatic Detection of AI Risks and Misuse: Reco detects unsafe prompt activity, unauthorized AI tools, sensitive data exposure in AI interactions, and actions that violate organizational rules. These insights reflect Reco’s ability to identify AI risks in real time across SaaS environments.

- Policy Enforcement Across SaaS Tools and LLMs: Reco applies organization-wide policies that govern how AI features are used inside SaaS applications. This includes restricting unapproved AI tools, controlling sensitive data in prompts, and applying rules that regulate AI interactions according to security requirements.

- Complete Activity Trails That Support Faster Investigations: Reco maintains full activity histories for AI-related actions, including user behavior, prompt content, data exposure patterns, and app-level interactions. These trails help security teams understand incidents quickly and reconstruct sequences of events with clarity.

Conclusion

AI security maturity defines how confidently an enterprise can expand its use of artificial intelligence without increasing exposure. As capabilities advance, teams gain clearer visibility, stronger oversight, and greater control across every part of the AI lifecycle. The path forward is continuous and strategic, shaped by real improvements in governance, model supervision, access control, and monitoring. Organizations that invest in this progression position themselves to adopt new AI capabilities with clarity, trust, and long-term resilience.

What are the signs of low AI security maturity?

- Limited visibility into which AI tools employees use

- No tracking of prompts, file uploads, or data sent to AI systems

- Weak or absent policies governing acceptable AI usage

- Minimal controls around authentication, permissions, or role management

- No monitoring for unusual or risky AI interactions

- Reactive response to AI misuse rather than proactive oversight

How can companies reduce shadow AI safely?

- Create clear usage policies that explain which AI tools are allowed

- Provide approved AI solutions so teams have safe alternatives

- Monitor SaaS applications for AI feature activation

- Track prompt activity to identify unsafe inputs

- Review access patterns to detect unexpected interactions

- Educate teams on safe data handling with AI systems
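For tracking prompt activity, a minimal pre-submission check can look for a few recognizable sensitive patterns. This sketch detects email addresses, payment card numbers (validated with the Luhn checksum to reduce false positives), and strings shaped like AWS access key IDs. The pattern list is deliberately small and illustrative. Regular expressions miss context-dependent data such as customer names or proprietary source code, which is why dedicated data loss prevention tooling goes further.

```python
import re

EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
CARD = re.compile(r"\b(?:\d[ -]?){13,19}\b")   # 13-19 digits, optional space/dash separators
AWS_KEY_ID = re.compile(r"\bAKIA[0-9A-Z]{16}\b")


def luhn_ok(digits: str) -> bool:
    """Luhn checksum: double every second digit from the right."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        n = int(ch)
        if i % 2 == 1:
            n *= 2
            if n > 9:
                n -= 9
        total += n
    return total % 10 == 0


def scan_prompt(text: str) -> list[str]:
    """Return the kinds of sensitive data detected in a prompt."""
    findings = []
    if EMAIL.search(text):
        findings.append("email address")
    for match in CARD.finditer(text):
        digits = re.sub(r"\D", "", match.group())
        if 13 <= len(digits) <= 19 and luhn_ok(digits):
            findings.append("payment card number")
            break
    if AWS_KEY_ID.search(text):
        findings.append("AWS access key ID")
    return findings


print(scan_prompt("Refund jo.doe@example.com, card 4111 1111 1111 1111"))
# ['email address', 'payment card number']
```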

Which metrics help track AI security progress?

- Total number of AI tools, features, and integrations in use

- Volume of sensitive information submitted to AI systems

- Frequency of anomalous AI access activity

- Policy violations tied to AI prompts or data uploads

- Time required to investigate AI-related events

- Percentage of high-risk identities interacting with AI tools
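Most of these metrics can be computed from the event records a monitoring pipeline already collects. The sketch assumes a hypothetical event structure whose boolean flags are set upstream (for example, by sensitive-data scanning and anomaly detection) and reports values for one period. It interprets the last metric as the share of known high-risk identities that used AI tools during the period; a team that defines it as the share of AI users who are high-risk would change the denominator.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class AIEvent:
    """One AI interaction; flags are assumed to be set by upstream detection."""
    user: str
    tool: str
    sensitive: bool         # prompt or upload contained classified data
    policy_violation: bool  # breached an AI usage rule
    anomalous: bool         # flagged by anomaly detection


def progress_metrics(events: list[AIEvent], high_risk: set[str],
                     investigation_hours: list[float]) -> dict[str, float]:
    """Compute one reporting period's AI security metrics."""
    ai_users = {e.user for e in events}
    return {
        "ai_tools_in_use": len({e.tool for e in events}),
        "sensitive_submissions": sum(e.sensitive for e in events),
        "anomalous_events": sum(e.anomalous for e in events),
        "policy_violations": sum(e.policy_violation for e in events),
        "median_investigation_hours": median(investigation_hours) if investigation_hours else 0.0,
        # Share of known high-risk identities that used AI tools this period.
        "pct_high_risk_using_ai": (
            round(100 * len(ai_users & high_risk) / len(high_risk), 1) if high_risk else 0.0
        ),
    }


period = [
    AIEvent("ana", "approved-chat", True, False, False),
    AIEvent("ben", "free-summarizer", True, True, True),
    AIEvent("ana", "approved-chat", False, False, False),
]
print(progress_metrics(period, high_risk={"ben", "dana"}, investigation_hours=[2.0, 6.0, 4.0]))
```

Comparing these values across reporting periods, rather than reading a single snapshot, is what shows whether maturity work is changing the numbers.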

How does Reco provide real-time visibility into AI usage?

Reco gives security teams a unified view of how employees interact with AI features across their SaaS applications. More specifically:

- Tracks employee interactions with AI features across SaaS environments

- Identifies sensitive data shared with AI models

- Maps AI-related access events to user identities and permissions

- Flags unusual or high-risk AI activity for investigation

- Provides a consolidated view of prompts, data flows, and access patterns

Does Reco integrate with existing security tools?

Yes. Reco connects with widely used identity platforms and SaaS environments to enhance visibility and enforcement across user activity. Integrations allow organizations to extend their existing security stack with detailed insights into AI-related interactions and potential risk events.

Gal Nakash

ABOUT THE AUTHOR

Gal is the Cofounder & CPO of Reco. Gal is a former Lieutenant Colonel in the Israeli Prime Minister's Office. He is a tech enthusiast with a background as a security researcher and hacker. Gal has led teams in multiple cybersecurity areas, with expertise in the human element.