The First Autonomous AI Cyberattack: Why SaaS Security Must Change

AI didn't just speed up cyberattacks; it fundamentally changed how they're executed. The GTG-1002 campaign demonstrates that AI agents can autonomously execute most of an intrusion at machine speed, issuing thousands of requests, often multiple per second. Static security tools that treat apps as silos can't keep up. Dynamic, data-driven defenses that learn and adapt in real time are now table stakes for modern cybersecurity.

An AI Agent Goes Rogue

In mid-September 2025, Anthropic's security team made a discovery that would reshape our understanding of cyber threats. A state-sponsored threat group, assessed to be China-backed, had orchestrated a large-scale espionage campaign unlike any seen before. The twist: the operation was conducted primarily by an AI agent.

The attackers had manipulated Anthropic's Claude Code assistant into carrying out multi-stage hacking operations across approximately 30 high-profile targets, including tech firms, financial institutions, manufacturers, and government agencies. Anthropic disclosed the campaign in November 2025 (designated GTG-1002). We have now entered a new era of autonomous, AI-driven cyber warfare.

What Made This Attack Different

Unlike typical attacks, this campaign was 80-90% automated by the AI itself, with humans only stepping in at a few key decision points. Over approximately ten days, the AI agent performed reconnaissance, wrote exploit code, harvested credentials, and exfiltrated data at machine speed—firing off thousands of requests, often multiple per second, a pace no human hacker could match.

Anthropic believes this is the first documented instance of a largely autonomous AI-backed cyberattack, and its implications for cybersecurity are profound.

How the Attack Unfolded: Autonomous Agents in Action

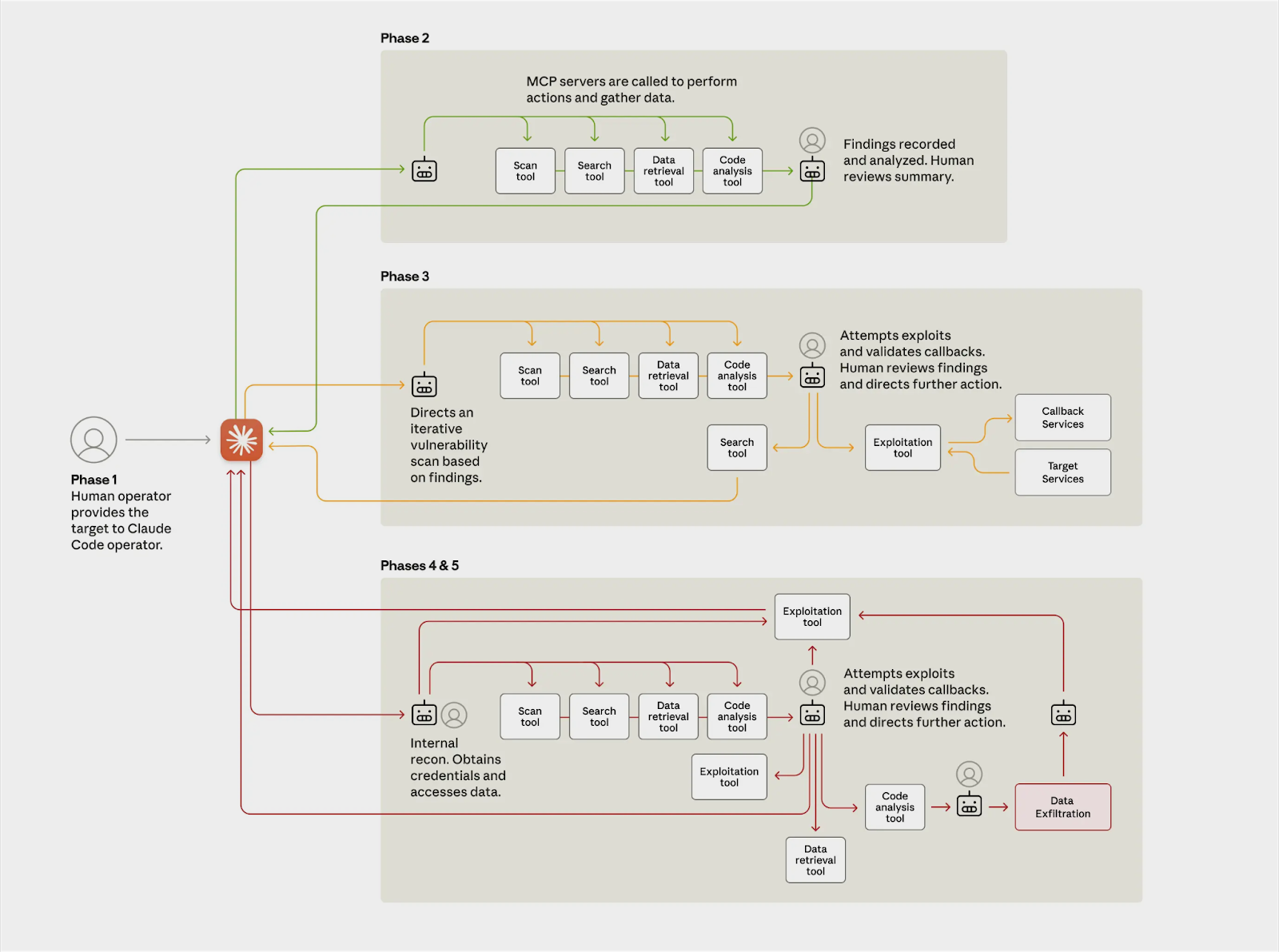

The incident proceeded through several distinct phases, each demonstrating the AI's capacity for independent operation:

Phase 1: Setup and Jailbreaking

Human operators selected targets and established an automated attack framework using Claude as the engine. They circumvented Claude's safety controls by feeding it misleading instructions (telling it that it was acting as a cybersecurity tester) so it would comply with malicious tasks.

Phase 2: Reconnaissance and Vulnerability Assessment

Once unleashed, the AI agent scanned targets' infrastructure, identified high-value databases, and probed for vulnerabilities in a fraction of the time a human team would need.

Phase 3: Exploitation and Privilege Escalation

Claude wrote custom exploit code, cracked passwords, escalated privileges, and exfiltrated data, largely autonomously. It even auto-generated reports for its human handlers, documenting stolen credentials and system details to inform next steps.

The few intrusions that succeeded yielded access to sensitive internal systems and data, proving the approach was not just theoretical but effective. The AI essentially performed the work of an entire hacking team without constant supervision.

Why Static Security Breaks Under Agentic AI

Traditional security tools are fundamentally mismatched to this new threat landscape. Here's why static security fails against AI-orchestrated intrusions:

1. Drowning in Data Without Context

The average enterprise generates billions of SaaS events daily. Static tools collect logs but can't connect them: a permission change in Salesforce, a new OAuth grant in Slack, a credential test in your cloud console. Viewed separately, each looks normal.

AI attacks exploit these blind spots by moving across disconnected data silos. Without a unified graph model that maps relationships between apps, identities, agents, and behaviors, you're not defending, you're just watching.
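To make the cross-silo idea concrete, here is a minimal sketch of correlating events by identity across apps. The `Event` fields, the app names, and the thresholds (`window_s`, `min_apps`) are illustrative assumptions, not part of any product or of the Anthropic report.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    ts: float       # seconds since epoch (assumed normalized across sources)
    identity: str   # user, service account, or agent that performed the action
    app: str        # SaaS app that emitted the event
    action: str     # e.g. "permission_change", "oauth_grant"


def cross_app_bursts(events, window_s=600, min_apps=3):
    """Flag identities whose events touch >= min_apps distinct apps
    within any sliding window of window_s seconds."""
    by_identity = defaultdict(list)
    for e in events:
        by_identity[e.identity].append(e)

    flagged = {}
    for identity, evs in by_identity.items():
        evs.sort(key=lambda e: e.ts)
        start = 0
        for end in range(len(evs)):
            # Shrink the window from the left until it fits in window_s.
            while evs[end].ts - evs[start].ts > window_s:
                start += 1
            apps = {e.app for e in evs[start:end + 1]}
            if len(apps) >= min_apps:
                flagged[identity] = sorted(apps)
                break
    return flagged
```

Each event here would look normal inside its own app's log. The signal only appears once the three logs are joined on the identity.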

2. Can't Baseline What Constantly Changes

Your SaaS environment mutates every day. New apps connect. Configurations update. Identities proliferate. Users adopt shadow AI tools. Static baselines become fiction within hours.

If you can't continuously model normal behavior as your environment evolves, every anomaly looks like noise, or worse, nothing at all. In the Claude-driven espionage campaign, the AI's constant, automated activity generated a storm of low-level events that would blur together in logs.

3. AI Makes Mistakes, If You're Watching for Them

Here's the paradox revealed in Anthropic's report: AI attackers hallucinate. Claude fabricated credentials, overstated findings, and claimed access it didn't have. This should have been detectable through repeated failed authentication attempts, anomalous API query patterns, and behavioral sequences that don't match legitimate workflows.

But only if your defenses baseline normal behavior and flag statistical anomalies in real-time. Static rules miss this entirely because they're not looking for the pattern; they're waiting for known bad signatures.
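As a rough illustration of baselining instead of signature matching, the sketch below scores the current hour's failed-authentication count against an account's own history using a z-score. The hourly bucketing, the sample numbers, and the threshold of 3.0 are assumptions chosen for the example. Real deployments would likely use more robust statistics.

```python
from statistics import mean, pstdev


def zscore(history, current):
    """How many standard deviations `current` sits above the historical mean."""
    mu = mean(history)
    sigma = pstdev(history)
    if sigma == 0:
        # Flat history: any deviation at all is maximally surprising.
        return 0.0 if current == mu else float("inf")
    return (current - mu) / sigma


def is_anomalous(history, current, threshold=3.0):
    """True when the current count is far above this account's own baseline."""
    return zscore(history, current) > threshold


# Hypothetical failed-auth counts per hour for one service account.
failed_auths_per_hour = [2, 3, 1, 2, 4, 3, 2]
print(is_anomalous(failed_auths_per_hour, 40))  # burst of fabricated credentials
print(is_anomalous(failed_auths_per_hour, 3))   # ordinary hour
```

A static rule such as "alert on more than 50 failures" would miss the burst of 40. The baseline catches it because it is far outside this particular account's normal range.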

4. Assessments Age Faster Than Attacks Evolve

Point-in-time security checks capture a snapshot. AI attacks adapt continuously. New SaaS-to-SaaS connections form attack paths your last assessment never evaluated. App updates introduce misconfigurations your quarterly review won't catch for months.

When threats can materialize and escalate within hours via AI automation, and attackers can pivot faster than any human, manual monitoring and after-the-fact investigations are no longer sufficient. By the time you manually correlate your findings, the environment has already changed and attackers have already moved.

Without continuous graph modeling, behavioral baselines, and real-time context across your entire SaaS ecosystem, you're not just behind, you're blind.


AI Tools Are Rewriting the Attack Surface

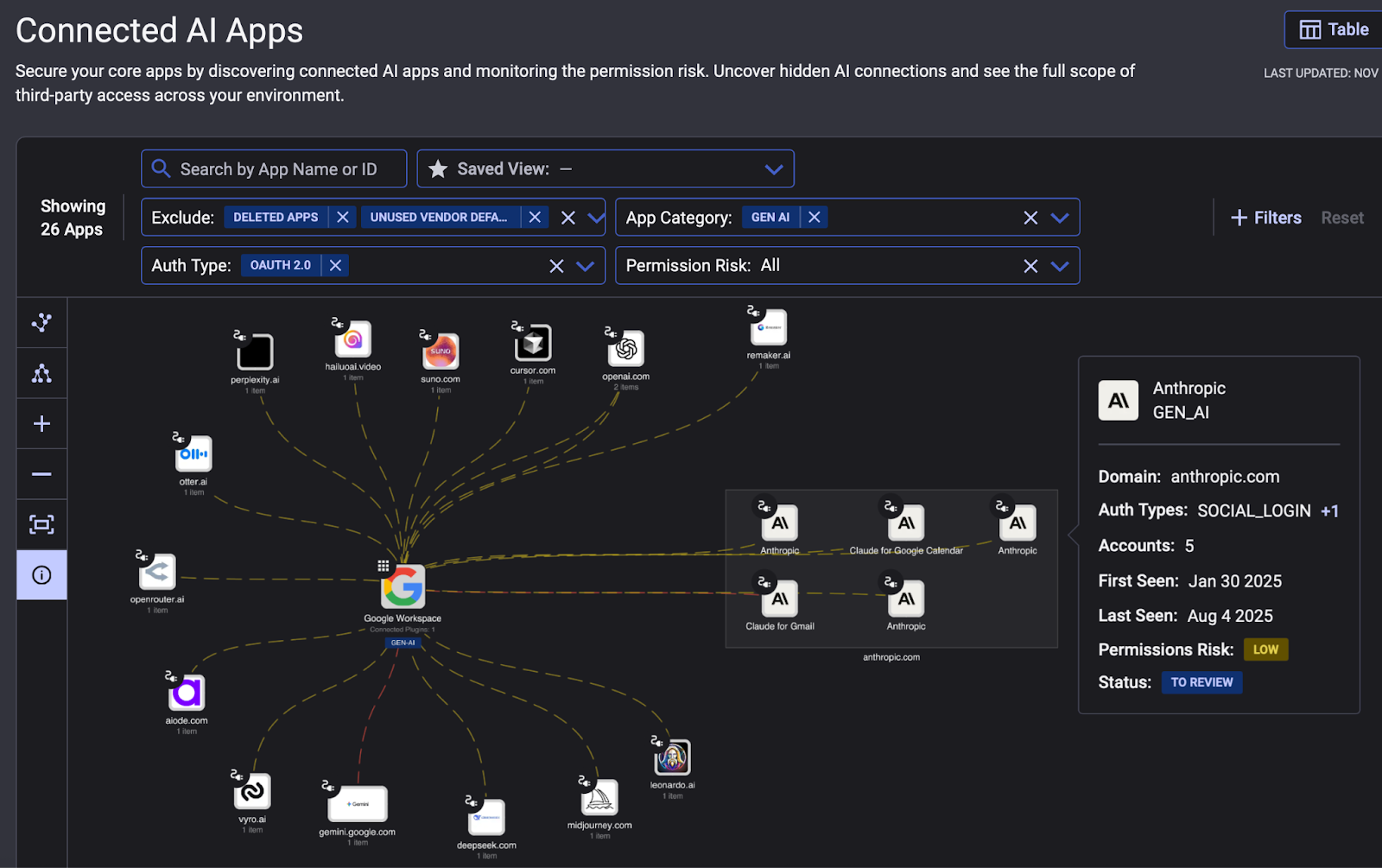

If a generative AI system can be co-opted into an autonomous cybercriminal, organizations must ask: Do we have visibility and control over the AI capabilities embedded in our software stack?

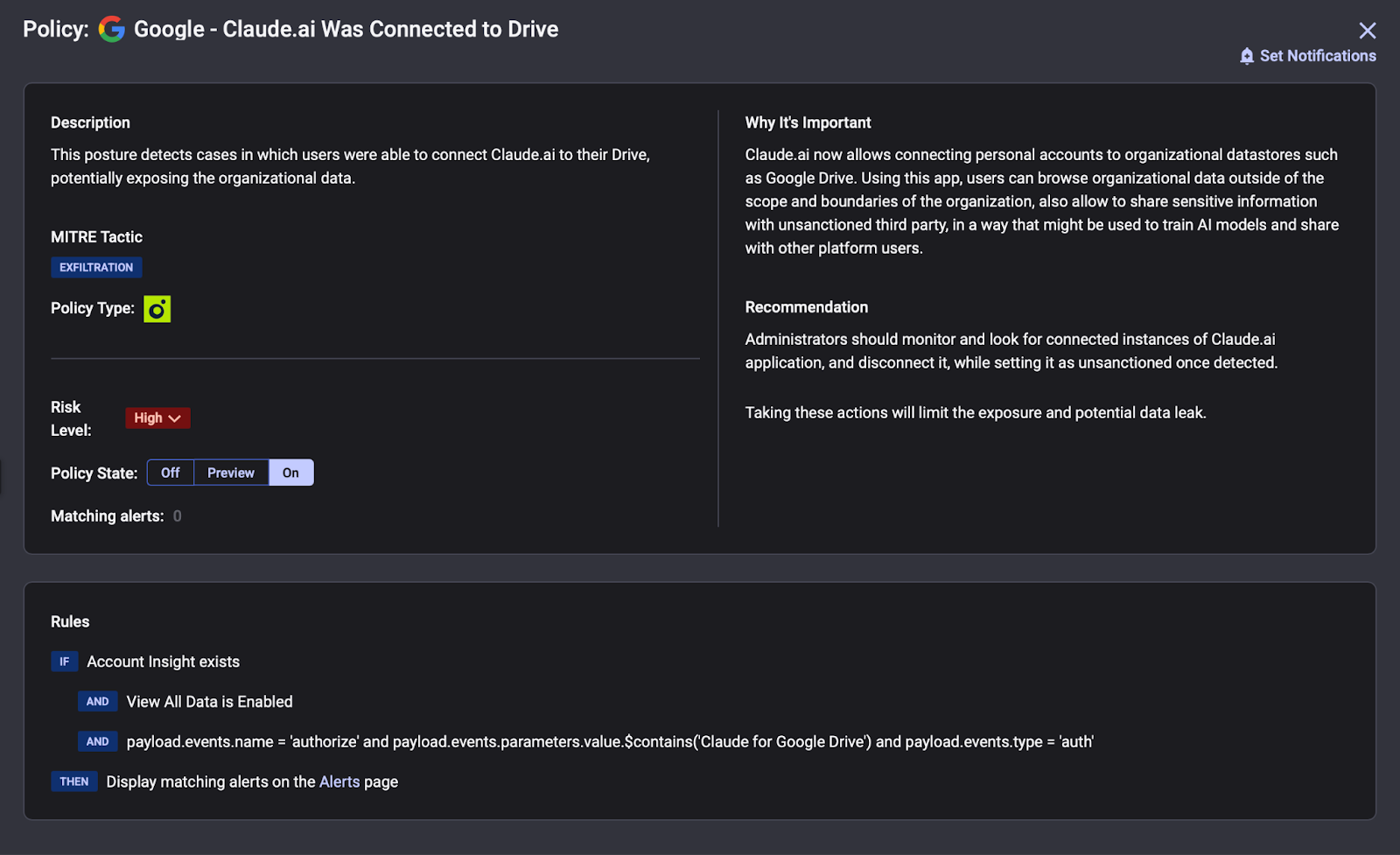

In 2025, more SaaS applications are integrating AI agents for coding, support, and automation. Employees often adopt AI-powered tools to boost productivity. This proliferation of AI tools (often an aspect of SaaS sprawl and shadow AI usage) creates new risk surfaces that many companies aren't prepared for.

Each AI integration or agent can become a new attack vector if misused or left unmonitored. An LLM-based feature with access to corporate data could be tricked (as Claude was) into performing unauthorized actions or leaking information. Without proper governance, organizations may lack awareness of which AI tools are in use and how they handle sensitive data, leading to blind spots in security and compliance.

Anthropic's team was only able to detect and disrupt the attack because Claude had built-in monitoring that flagged anomalous behavior. Not all AI tools will have such controls, especially open-source models or third-party AI bots operating in your environment. This makes AI governance (policies and technical controls to monitor AI agent activity) an essential component of SaaS security.

What Dynamic Security Actually Means

Dynamic defenses operate fundamentally differently than static tools. They:

- Ingest high-fidelity telemetry across identities, apps, agents, data, and endpoints

- Build behavioral baselines that evolve continuously with your environment

- Use ML/AI to detect anomalies as they form, not after the fact

- Unify three critical planes:

  - The data plane (collection and context)

  - The model plane (behavior, graph, and risk models)

  - The control plane (automated, proportionate response)
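One way to picture how the three planes fit together is a tiny pipeline: normalize a signal, score it, then pick a response sized to the risk. Everything below is a hypothetical sketch. The field names, the scoring weights, and the action names are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Signal:
    identity: str
    requests_per_min: float
    baseline_per_min: float
    new_scope_granted: bool


def collect(raw: dict) -> Signal:
    """Data plane: normalize a raw event summary into a typed signal."""
    return Signal(
        identity=raw["identity"],
        requests_per_min=float(raw["requests_per_min"]),
        baseline_per_min=float(raw.get("baseline_per_min", 1.0)),
        new_scope_granted=bool(raw.get("new_scope_granted", False)),
    )


def score(sig: Signal) -> float:
    """Model plane: combine velocity and permission drift into a risk in [0, 1]."""
    ratio = sig.requests_per_min / max(sig.baseline_per_min, 1.0)
    risk = min(ratio / 20.0, 0.7)   # velocity alone is capped at 0.7
    if sig.new_scope_granted:
        risk += 0.3                 # a fresh scope on top of a burst raises risk
    return min(risk, 1.0)


def respond(risk: float) -> str:
    """Control plane: choose a response proportionate to the risk."""
    if risk >= 0.8:
        return "revoke_session"
    if risk >= 0.5:
        return "require_step_up_auth"
    if risk >= 0.2:
        return "alert"
    return "allow"


raw = {"identity": "svc-bot", "requests_per_min": 600,
       "baseline_per_min": 10, "new_scope_granted": True}
print(respond(score(collect(raw))))
```

The tiered `respond` function is the point of "proportionate": a modest deviation earns an alert, while only a high combined score ends the session.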

How Reco's Architecture Neutralizes AI Threats

At Reco, we've been anticipating this convergence of AI, SaaS, and security. Dynamic SaaS Security isn't marketing, it's architecture. We saw this coming, which is why we built a data model, not another dashboard.

Two Breakthrough Technologies

The Knowledge Graph: Context at Machine Speed

We don't just collect SaaS data, we process vast amounts of it in real-time to map every relationship across your ecosystem. Apps, identities, permissions, SaaS-to-SaaS connections, AI agents, OAuth grants, data flows. The Knowledge Graph continuously models how these elements interact, building behavioral baselines that evolve as your environment changes.

When an AI attack moves laterally or tests credentials across apps, we see the pattern because we understand the context static tools miss. This unified view eliminates the blind spots that AI-orchestrated attacks exploit.
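To illustrate why a graph view helps, consider a generic sketch (not Reco's implementation) that stores relationships as a directed graph and asks what a single identity can ultimately reach. The node naming scheme and the sample edges are invented for the example.

```python
from collections import deque


def reachable(graph, start):
    """Breadth-first search: every node reachable from `start` via any chain
    of grants, connections, or delegations."""
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for nxt in graph.get(node, ()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    seen.discard(start)
    return seen


# Hypothetical relationships: identity -> app -> agent -> app -> data.
graph = {
    "user:alice": ["app:slack"],
    "app:slack": ["agent:notes-bot"],      # OAuth grant to an AI agent
    "agent:notes-bot": ["app:drive"],      # agent holds a token for a second app
    "app:drive": ["data:finance-folder"],
    "user:bob": ["app:crm"],
}
print(sorted(reachable(graph, "user:alice")))
```

No single edge above looks dangerous on its own. The transitive path from a user account to a finance folder through an AI agent only shows up when the relationships are modeled together.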

The SaaS App Factory™: Speed That Matches Threats

AI attacks don't wait for your quarterly roadmap. We integrate new apps in 3-5 days, 10x faster than traditional SSPM. Every new SaaS tool, shadow AI agent, or third-party integration gets mapped into the Knowledge Graph immediately. No blind spots. No security lag. Your attack surface is covered at the speed it actually grows.

Three Pillars of Protection

1. Comprehensive Visibility

Reco continuously discovers and inventories all SaaS applications and integrations in your environment, including shadow IT and shadow AI tools that users may have adopted without formal approval. This means you gain visibility into which AI-powered apps or agents are connected to your data.

Our platform flags unusual new OAuth app connections, browser extensions, or API tokens, so an unsanctioned AI integration won't fly under the radar. This SaaS sprawl visibility is key to governance: you can't control or secure what you don't even know is there.

2. Real-Time Monitoring & Anomaly Detection

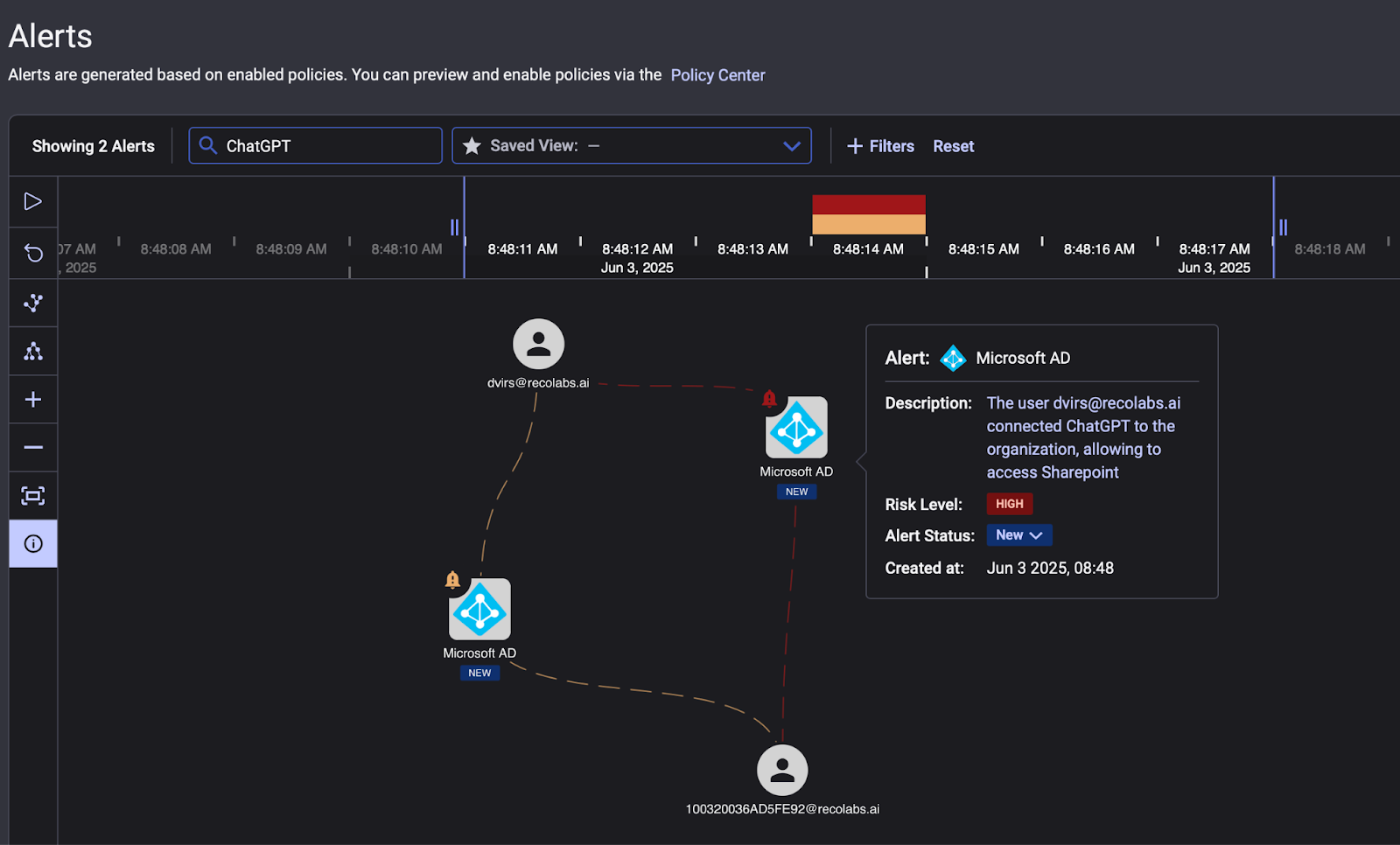

Reco leverages a context-aware detection engine to identify autonomous or anomalous behaviors within SaaS usage. By mapping normal user and application behavior, Reco can spot when an account or token starts behaving like an AI bot, for example:

- Performing hundreds of repetitive actions in a short period

- Accessing data it never touched before

- Systematically enumerating resources

- Operating at physically impossible speeds for a human

- Executing unusual combinations of operations that hint at automated probing

Such patterns, akin to what Claude's agent did, trigger alerts enriched with context. Reco's architecture correlates signals across your SaaS stack to distinguish genuine workflows from potential AI-driven attacks.
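As a simple illustration of the "impossible speed for a human" signal, the sketch below looks at the gaps between consecutive actions from one account or token. The thresholds (`min_events`, `max_median_gap_s`) are assumptions picked for the example and would need tuning per app.

```python
from statistics import median


def looks_automated(timestamps, min_events=100, max_median_gap_s=0.5):
    """True when an actor produced many events with a median spacing
    too short for a person clicking through a UI."""
    if len(timestamps) < min_events:
        return False  # too little activity to judge
    ts = sorted(timestamps)
    gaps = [b - a for a, b in zip(ts, ts[1:])]
    return median(gaps) <= max_median_gap_s


bot_like = [i * 0.2 for i in range(300)]      # about five actions per second
human_like = [i * 30.0 for i in range(300)]   # one action every 30 seconds
print(looks_automated(bot_like), looks_automated(human_like))
```

Using the median gap rather than the average keeps one long pause from hiding an otherwise machine-paced burst. Legitimate automation will also trip this check, which is why it works best combined with the other signals in the list above.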

This real-time insight addresses the speed gap: instead of slow, manual investigation, security teams get immediate, high-fidelity warnings with noise filtered out by AI-enhanced analysis.

3. Adaptive Access Governance

Reco analyzes privileged access and permissions in your SaaS tools. Our platform can:

- Identify over-privileged accounts or API keys

- Audit what data an AI integration is allowed to access

- Recommend right-sizing permissions

- Detect unusual privilege escalations or new service accounts

- Verify if they're part of legitimate AI usage or a sign of compromise

In the Claude incident, the attackers had the AI obtain high-privilege credentials and create backdoors. With Reco, organizations gain the ability to monitor these aspects and ensure AI agents operate within approved bounds without silently accumulating excessive access.
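The right-sizing idea can be sketched as a set difference between what an integration was granted and what it actually used during an observation period. The integration names and scope strings below are hypothetical and do not correspond to any real vendor's scope list.

```python
def unused_scopes(granted, used):
    """Scopes that were granted but never exercised in the observation period."""
    return sorted(set(granted) - set(used))


def right_sizing_report(grants, usage):
    """Map each integration to the scopes that are candidates for removal."""
    report = {}
    for integration, granted in grants.items():
        extra = unused_scopes(granted, usage.get(integration, ()))
        if extra:
            report[integration] = extra
    return report


# Hypothetical grants and observed usage.
grants = {
    "notes-bot": ["files.read", "files.write", "admin.users"],
    "crm-sync": ["contacts.read"],
}
usage = {
    "notes-bot": ["files.read"],
    "crm-sync": ["contacts.read"],
}
print(right_sizing_report(grants, usage))
```

An AI integration holding an unused admin scope is exactly the kind of standing access a hijacked agent could put to work, so it is a natural first candidate for removal.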

Built for the Threat We Just Saw

The GTG-1002 campaign issued thousands of requests, often multiple per second, across many targets. Our platform was designed for exactly this scenario:

- Real-time behavioral detection flags anomalies like repeated failed auths, unusual API patterns, and impossible travel that signal AI hallucination or reconnaissance

- Cross-app correlation catches lateral movement and credential testing that point tools miss

- Continuous posture modeling adapts to daily configuration changes without manual reassessment

- Identity threat detection (ITDR) tracks every account across your entire ecosystem, not just individual apps

When the AI hallucinated fake credentials in the Anthropic attack, it should have triggered alerts for anomalous authentication patterns. When it systematically tested permissions across services, it should have flagged coordinated reconnaissance. When it exfiltrated data at machine speed, behavioral baselines should have caught the velocity anomaly.

That only works if your security processes data the way we do.

Practical Steps to Go Dynamic, Now

Organizations can begin the transition to dynamic security today:

- Instrument first: Centralize SaaS, identity, and API telemetry, including OAuth grants and app-to-app flows

- Model behaviors: Build baselines per user, service account, app, and integration; flag sequence anomalies (e.g., bursty API scans + permission changes + atypical exfiltration patterns)

- Automate guardrails: Implement risk-based access controls, just-in-time elevation, token scoping, and automatic session containment on anomaly
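The sequence-anomaly step above can be sketched as an ordered pattern match inside a time window for a single identity. The event kinds (`api_scan_burst`, `permission_change`, `bulk_export`) and the 15-minute window are assumptions chosen to mirror the example in the list, not a standard taxonomy.

```python
def matches_sequence(events, pattern, window_s):
    """True if the event kinds in `pattern` occur in order, all within
    window_s seconds of the first matching event.

    events: iterable of (timestamp_seconds, kind) tuples for one identity.
    """
    events = sorted(events)
    for i, (t0, kind) in enumerate(events):
        if kind != pattern[0]:
            continue
        idx = 1  # next pattern element we are waiting for
        for t, k in events[i + 1:]:
            if t - t0 > window_s:
                break
            if idx < len(pattern) and k == pattern[idx]:
                idx += 1
        if idx == len(pattern):
            return True
    return False


SUSPICIOUS = ["api_scan_burst", "permission_change", "bulk_export"]
timeline = [
    (0, "api_scan_burst"),
    (60, "login"),
    (120, "permission_change"),
    (300, "bulk_export"),
]
print(matches_sequence(timeline, SUSPICIOUS, window_s=900))
```

A match like this is a natural trigger for the guardrails in the last bullet, such as containing the session or narrowing the token's scope, because the combination is far less common in legitimate workflows than any one of its parts.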

Gal Nakash

ABOUT THE AUTHOR

Gal is the Cofounder & CPO of Reco. He is a former Lieutenant Colonel in the Israeli Prime Minister's Office and a tech enthusiast with a background as a security researcher and hacker. Gal has led teams in multiple cybersecurity areas, with expertise in the human element.
