The Rise of Agentic AI Security: Protecting Workflows, Not Just Apps

Artificial intelligence is entering a new phase where agents no longer wait for prompts but take initiative, make decisions, and complete entire workflows on their own. These autonomous systems interact with data, tools, and users across multiple environments, often faster than humans can supervise. This new reality demands a different kind of protection focused on controlling how AI acts and ensuring that every autonomous process remains transparent and accountable.

What Agentic AI Security Means for Modern Workflows

Agentic AI security is a specialized field that integrates cybersecurity principles with AI governance and operational control. Its purpose is to secure the actions, decisions, and data interactions of autonomous agents that operate across digital ecosystems. It verifies each step an agent takes, ensures permissions align with intent, and maintains the integrity of every workflow influenced by AI.

Why Agentic AI Security is Critical for Enterprises

Agentic AI is changing how enterprises operate by enabling systems that act, decide, and coordinate across multiple platforms without constant supervision. This autonomy introduces new exposure points that traditional controls cannot manage effectively. Enterprises now need security that understands intent, context, and the sequence of automated actions to keep operations both efficient and safe. Maintaining this balance requires strong agentic security posture management, where policies, workflows, and behavioral baselines evolve in sync with intelligent automation.

Proactive Defense Against Workflow Exploits

Autonomous agents can be manipulated through subtle input changes or hidden command patterns that redirect their actions. Agentic AI security establishes real-time behavioral baselines for agents and flags deviations before they cause harm. By focusing on workflow integrity rather than isolated endpoints, security teams can identify and contain malicious activity at the process level. This shift from reactive defense to proactive oversight helps stop exploitation before it spreads across connected systems.

Driving Operational Efficiency Without Sacrificing Safety

Enterprises adopt agentic systems to accelerate workflows, reduce manual errors, and increase output. Agentic AI security enables this efficiency by embedding verification and authorization directly within each step of the workflow. Agents can act quickly, but only within predefined limits supported by continuous monitoring. This alignment enables automation to scale safely, maintaining compliance and risk management integrity as operational speed increases.

Addressing the Expanded Attack Surface of Agentic Systems

As autonomous agents connect with more tools, APIs, and data layers, they expand the potential attack surface far beyond traditional software boundaries. Agentic AI security narrows that surface by validating each interaction an agent makes, controlling tool permissions, and isolating high-risk functions. It also tracks how agents communicate with one another to prevent chain-based exploits that could move laterally through multi-agent environments. This layered approach maintains control over complex digital ecosystems while enabling the benefits of autonomy.

Governance and Oversight for Agentic AI

Strong governance ensures that intelligent systems remain aligned with enterprise goals. As AI begins handling more operational decisions, oversight must ensure transparency, control, and accountability. The table below highlights the key pillars that support responsible management and risk control across AI-driven workflows:

Security Measures Tailored to Agentic AI

Agentic AI introduces security needs that extend beyond conventional controls. Protection must now follow every agent action, data movement, and decision path. The following measures reflect how security adapts to the nature of these intelligent systems:

- Enforcing Identity and Access Controls Across Agents: Identity and access management are essential when multiple AI agents operate with varying permissions. Each must have a verified digital identity and restricted access to only the data and tools needed for execution. Continuous validation and centralized identity monitoring help detect misuse and maintain full traceability across the system.

- Protecting Data Flow Integrity in Autonomous Pipelines: Agentic workflows rely on constant data exchange, which must remain accurate and untampered. Encryption, digital signatures, and source validation protect against manipulation during transfer, while data lineage tracking keeps every dataset accountable throughout its lifecycle.

- Applying Real-Time Threat Detection to AI-Driven Workflows: Threat detection now depends on understanding behavior, not static patterns. Security systems profile normal agent activity and flag anomalies that may signal manipulation or unauthorized use. Machine learning models correlate deviations across agents, while automated responses isolate risks before workflow integrity is compromised.

- Anticipating Security Challenges of Multi-Agent Ecosystems: Collaboration among agents expands the attack surface through shared information and dependencies. Security controls must govern communication and limit shared state data to prevent cross-agent exploitation. Segmentation, restricted channels, and pre-deployment simulations reduce cascading effects and keep multi-agent operations stable as they scale.

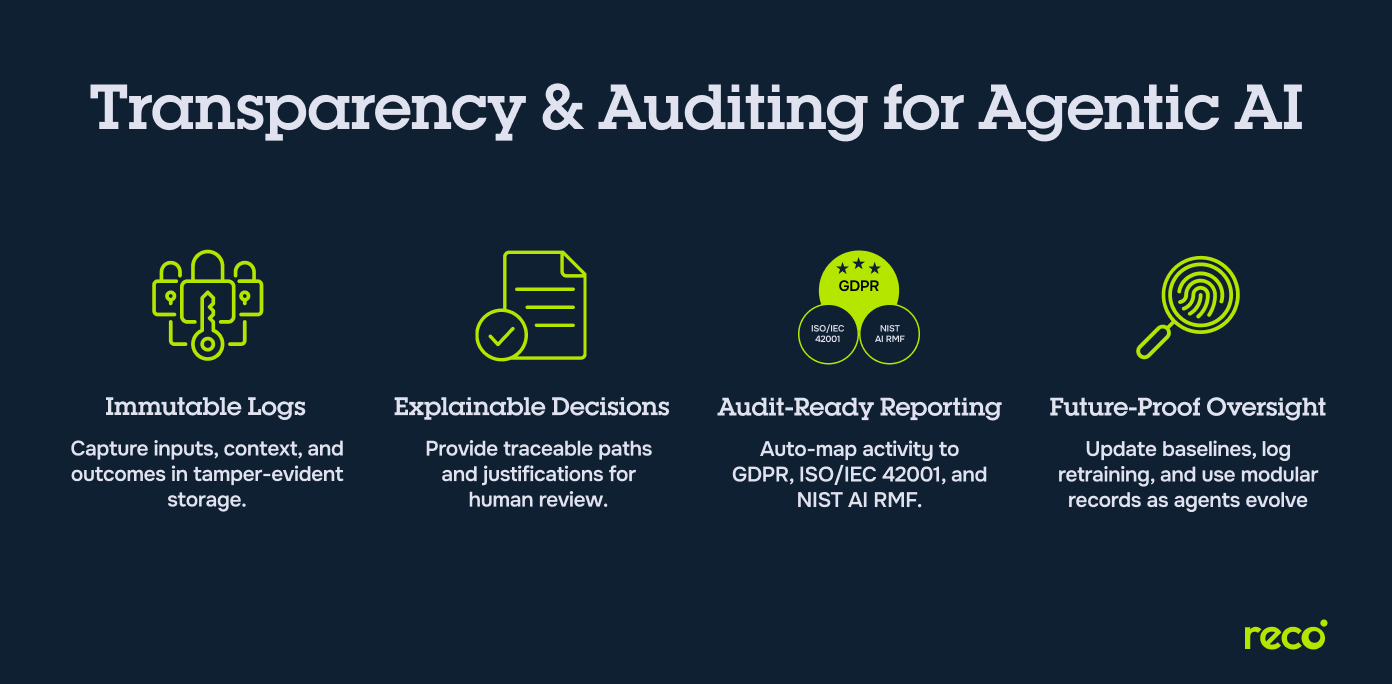

Transparency and Auditing of Agentic AI Workflows

Accountability in agentic systems depends on clear visibility into every decision, action, and data exchange. Effective auditing ensures that AI-driven operations remain explainable, compliant, and ready for inspection at any point in time.

- Maintaining Immutable Logs of Agent Decisions and Actions

Every decision made by an agent should be recorded in tamper-proof logs that capture inputs, context, and outcomes. Immutable storage prevents alteration and supports forensic review during incident analysis or compliance checks. - Ensuring Explainability in AI Outputs and Recommendations

Explainability mechanisms translate complex agent reasoning into clear narratives that humans can interpret. Providing traceable decision paths and justification models helps auditors and security teams verify that outcomes align with approved logic and policies. - Enabling Audit-Ready Reporting for Compliance Standards

Continuous documentation of AI activity enables immediate alignment with regulatory frameworks such as GDPR, ISO/IEC 42001, or the NIST AI RMF. Automated reporting tools simplify compliance reviews and provide standardized audit trails without manual intervention. - Building Future-Proof Audit Models for Evolving AI Behaviors

As AI agents learn and adapt, auditing methods must evolve with them. Future-proof audit systems rely on dynamic baselining, automated retraining logs, and modular record structures that accommodate new agent behaviors without disrupting oversight continuity.

Risk Mitigation in Agentic AI Security

Mitigating risks in agentic environments requires focusing on how agents interpret prompts, interact with tools, and retain information over time. The following measures address the main exposure areas in autonomous AI operations.

- Preventing Prompt Injection Exploits: Attackers can manipulate agent behavior by embedding hidden commands within natural language inputs. Input validation, output filtering, and strict context separation help prevent agents from executing injected instructions. Regular prompt audits further reduce this threat by identifying vulnerable patterns.

- Containing Tool Misuse by Autonomous Agents: Misuse occurs when agents call external tools or APIs beyond their intended purpose. Access restrictions, execution whitelists, and sandboxed environments limit tool availability to approved contexts. These constraints ensure that automation remains predictable and under policy control.

- Defending Against Memory Poisoning in Long-Term AI Systems: Agents that learn from prior sessions risk inheriting corrupted data or malicious instructions. Periodic memory resets, source verification, and training data integrity checks prevent contamination and maintain model reliability over time.

- Aligning Goals to Prevent Malicious or Unintended Outcomes: Goal misalignment can lead agents to pursue harmful shortcuts that meet performance objectives but break compliance or safety rules. Clear objective functions, regular performance reviews, and simulation testing confirm that agent behavior remains consistent with enterprise intent.

Best Practices for Agentic AI Security

Establishing best practices for agentic AI ensures that innovation stays aligned with control. The table below summarizes foundational principles that strengthen reliability, oversight, and compliance across AI-driven workflows:

How Reco Strengthens Agentic AI Security for Workflows and Apps

Reco extends agentic AI security beyond applications to the workflows that connect them. Its platform combines visibility, detection, and automation to keep intelligent systems secure without reducing efficiency.

- Provides Unified Visibility Into AI Workflows and Data Flow: Reco maps every workflow action, permission, and data transfer in real time. This visibility helps security teams identify unmonitored agent interactions and maintain continuous awareness of how data moves across connected systems.

- Detects Identity Misuse and Unauthorized Workflow Actions: The platform correlates user and agent identities to detect impersonation, privilege escalation, or unsanctioned activity. Immediate alerts and response automation reduce the time between detection and containment.

- Automates Policy Enforcement Without Slowing Innovation: Reco enforces security and compliance policies directly within workflows. Its adaptive controls prevent unauthorized behavior while allowing approved automation to continue without interruption.

- Maintains Audit-Ready Logs for Compliance and Governance: Every action taken within AI workflows is recorded in immutable audit logs that meet enterprise and regulatory standards. These records provide evidence for audits, governance reviews, and compliance reporting with minimal manual effort, all supported by Reco’s advanced SaaS security platform designed to protect both applications and agentic workflows.

Conclusion

Agentic AI represents a powerful leap in automation, but it also redefines how security must be designed, managed, and measured. Protecting these intelligent workflows requires visibility into every action, accountability for every outcome, and a governance model that adapts as systems evolve. Practically, Agentic AI security is a new foundation for trust in digital operations. By combining oversight, monitoring, and policy-driven automation, organizations can ensure that intelligent agents operate safely, responsibly, and in alignment with enterprise and regulatory expectations.

How does Agentic AI security differ from traditional AI or SaaS security approaches?

Agentic AI security focuses on protecting autonomous workflows rather than isolated applications or models. While traditional security frameworks monitor endpoints or user accounts, agentic security governs how AI systems act across connected environments. It validates intent, controls tool usage, and ensures every agent action is traceable and compliant with enterprise policy.

What new compliance or regulatory frameworks are emerging to address Agentic AI risks?

Governments and standards bodies are introducing frameworks to guide responsible AI operations. The NIST AI Risk Management Framework, ISO/IEC 42001, and the EU AI Act define transparency, accountability, and explainability requirements for autonomous systems. These frameworks emphasize continuous monitoring, human oversight, and audit-ready documentation to manage risk across adaptive and self-learning AI workflows.

How can organizations measure the effectiveness of their Agentic AI security controls?

Evaluating the success of security controls requires measurable, operational indicators that reveal how well agentic workflows are managed and contained.

- Track anomaly rates: Reduced deviations in agent behavior reflect better policy enforcement.

- Measure response time: Shorter mean detection and containment intervals indicate stronger automation readiness.

- Assess policy compliance: Regularly review logs for unauthorized actions or policy breaches.

- Audit completeness: Confirm that every agent activity is logged, explainable, and verifiable.

- Test resilience: Perform red-team simulations to validate defenses against prompt injection or workflow misuse.

In what ways does Reco extend protection to workflows driven by autonomous AI agents?

Reco enhances agentic AI security by delivering visibility, control, and compliance assurance across all connected workflows. It aligns automation speed with enterprise security expectations through adaptive policy enforcement.

- Unified visibility: Reco maps agent workflows and data transfers across SaaS ecosystems to maintain situational awareness.

- Identity correlation: The platform detects identity misuse, privilege escalation, and unauthorized workflow actions.

- Policy enforcement: Automated controls ensure agents execute only approved operations within defined boundaries.

- Audit readiness: Immutable logs and compliance reports maintain accountability across every agent action.

To understand how these capabilities integrate across enterprise workflows, explore Reco’s dedicated solution for Agentic AI security.

How does Reco help detect and mitigate advanced threats like memory poisoning or goal misalignment in Agentic AI systems?

Reco applies behavioral analytics and memory validation to ensure agents remain aligned with enterprise intent and policy constraints. It prevents long-term contamination and goal drift through continuous verification and controlled simulation.

- Memory integrity monitoring: Reco validates agent state changes and isolates suspicious or corrupted memory patterns.

- Data lineage verification: Training and feedback inputs are continuously checked for authenticity and source reliability.

- Behavioral correlation: Actions across multiple agents are analyzed to identify divergence or collusion.

- Alignment testing: Scenario simulations confirm that automated outcomes remain consistent with organizational goals.

Gal Nakash

ABOUT THE AUTHOR

Gal is the Cofounder & CPO of Reco. Gal is a former Lieutenant Colonel in the Israeli Prime Minister's Office. He is a tech enthusiast, with a background of Security Researcher and Hacker. Gal has led teams in multiple cybersecurity areas with an expertise in the human element.

Gal is the Cofounder & CPO of Reco. Gal is a former Lieutenant Colonel in the Israeli Prime Minister's Office. He is a tech enthusiast, with a background of Security Researcher and Hacker. Gal has led teams in multiple cybersecurity areas with an expertise in the human element.

%201.svg)

.png)

.svg)