ChatGPT API compliance focuses on how organizations securely and responsibly integrate and use the API while adhering to OpenAI’s usage policies, data protection standards, and legal requirements. Compliance involves building systemic processes, controls, and governance mechanisms that ensure data handling, security, monitoring, and model usage stay within authorized limits.

This guide provides a detailed, practical framework for teams creating, deploying, or maintaining production environments powered by the ChatGPT API.

Before implementing controls, teams must clearly understand what compliance means in the context of the ChatGPT API. At a minimum, it means adhering to OpenAI’s Usage Policies, including restrictions on disallowed content, privacy commitments, and proper data handling practices. Beyond that, organizations must ensure API integrations meet internal governance standards for data security, logging, user consent, and access management while remaining audit-ready.

When integrated into enterprise workflows, the ChatGPT API becomes part of a broader compliance landscape. Applications that process sensitive business data, personally identifiable information (PII), or customer communications must ensure that API calls, responses, and metadata do not violate internal data residency or privacy mandates. Understanding this foundational compliance context is critical before building the technical implementation.

The first step toward compliance is defining what data will, and will not, be sent to the ChatGPT API.

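OpenAI retains API inputs and outputs for up to 30 days for abuse and misuse monitoring, and by default it does not use API data to train its models. ChatGPT Enterprise and ChatGPT Team are separate workspace products with their own data controls, so subscribing to them does not change how API traffic is retained. For the API itself, zero data retention is an option that eligible customers must request from OpenAI, and it applies only to supported endpoints. Teams should confirm the current terms for their account and document clear data classification and boundary rules.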

Data minimization is a compliance necessity: only the data required to generate a relevant model response should leave your environment. Implement filtering at the API request layer through middleware or preprocessing pipelines.
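As a minimal sketch of request-layer filtering, the preprocessing step below replaces two kinds of identifiers with placeholders before a prompt is assembled. The function names (`redact`, `build_messages`) and the two patterns are illustrative assumptions, not part of any OpenAI SDK, and regular expressions alone will not catch every form of PII.

```python
import re

# Illustrative patterns only; real deployments need patterns tuned to their own data.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# Matches US-style Social Security numbers such as 123-45-6789.
SSN_RE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def redact(text: str) -> str:
    """Replace matched identifiers with fixed placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = SSN_RE.sub("[SSN]", text)
    return text


def build_messages(user_text: str) -> list:
    """Build a chat-style payload from redacted text only (hypothetical helper)."""
    return [{"role": "user", "content": redact(user_text)}]
```

Running the filter in a single shared helper, rather than in each calling service, keeps the redaction rules in one reviewable place.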

Once data boundaries are defined, document them in an API compliance register - a living record of approved use cases, data categories, and access scopes. These boundaries are only effective when supported by secure network configurations and authentication mechanisms.
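One way to make the register enforceable rather than purely descriptive is to keep it in a machine-readable form that request code can consult. The sketch below assumes a hypothetical in-memory register; the entry fields, the example use case, and the data category labels are all invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RegisterEntry:
    """One approved use case in the (hypothetical) API compliance register."""
    use_case: str
    allowed_data_categories: frozenset
    owner: str


# Example content only; a real register would be loaded from a reviewed source.
REGISTER = {
    "support_summarization": RegisterEntry(
        use_case="support_summarization",
        allowed_data_categories=frozenset({"public", "internal"}),
        owner="support-platform-team",
    ),
}


def is_request_approved(use_case: str, data_categories: set) -> bool:
    """Approve only registered use cases whose data categories are all allowed."""
    entry = REGISTER.get(use_case)
    if entry is None:
        return False
    return data_categories <= entry.allowed_data_categories
```

For example, `is_request_approved("support_summarization", {"internal"})` passes, while the same use case with a `"pii"` category, or any unregistered use case, is rejected.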

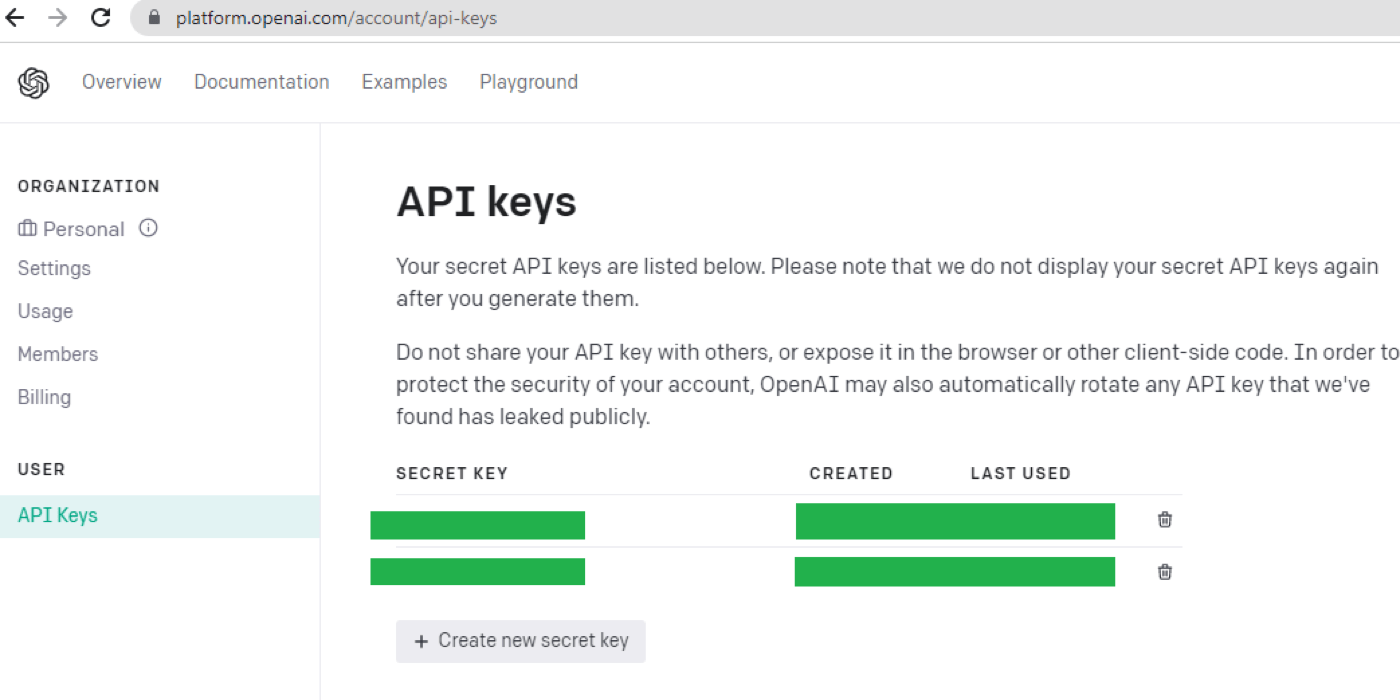

API security compliance begins with how requests to the ChatGPT API are authenticated and protected. OpenAI uses API keys for authentication, which must be handled as sensitive credentials. Best practices for secure key management include:

For production systems, route API calls through a secure proxy or gateway layer to enforce centralized policies, request throttling, IP allowlists, and structured logging - all essential for compliance and security.
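The sketch below illustrates three of the gateway checks mentioned above: loading the key from the environment instead of source code, an IP allowlist, and a simple token-bucket throttle. The network range and limits are placeholder assumptions, and a production gateway would normally rely on an existing API gateway product rather than hand-rolled code.

```python
import ipaddress
import os
import time

# Placeholder allowlist; substitute the networks your organization approves.
ALLOWED_NETWORKS = [ipaddress.ip_network("10.0.0.0/8")]


def load_api_key() -> str:
    """Read the key from the environment so it never lives in source control."""
    key = os.environ.get("OPENAI_API_KEY")
    if not key:
        raise RuntimeError("OPENAI_API_KEY is not set")
    return key


def ip_allowed(client_ip: str) -> bool:
    """Return True if the caller's address falls inside an approved network."""
    addr = ipaddress.ip_address(client_ip)
    return any(addr in net for net in ALLOWED_NETWORKS)


class TokenBucket:
    """Allow up to `capacity` requests in a burst, refilled at a steady rate."""

    def __init__(self, capacity: int, refill_per_second: float):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.tokens = float(capacity)
        self.updated = time.monotonic()

    def allow(self) -> bool:
        now = time.monotonic()
        elapsed = now - self.updated
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_second)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

A gateway handler would call `ip_allowed` and `TokenBucket.allow` before forwarding a request, and reject the call if either check fails.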

With these controls in place, the next step is protecting and retaining data responsibly.

Compliance requires robust encryption both in transit and at rest. The ChatGPT API enforces TLS 1.2+ for all traffic, which covers data in transit between your systems and OpenAI. Encryption must also extend across the rest of the organization’s data flow, including any stored prompts, responses, and logs.

At-rest encryption considerations:

Retention policy configuration:

Most frameworks (SOC 2, GDPR, HIPAA) require clearly defined data retention limits. OpenAI’s data handling commitments cover only its side of the exchange, so organizations should define local retention rules and ensure that any stored ChatGPT API request or response data is deleted or archived according to policy.
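A local retention rule can be reduced to a cutoff date applied to stored records. The following sketch assumes a hypothetical 30-day local policy and records that carry a timezone-aware `stored_at` timestamp; both are illustrative choices, not requirements from any framework.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

# Hypothetical local policy; set this from your documented retention schedule.
RETENTION = timedelta(days=30)


def purge_expired(records: list, now: Optional[datetime] = None) -> list:
    """Return only the records still inside the retention window.

    Each record is a dict with a timezone-aware `stored_at` datetime.
    """
    now = now or datetime.now(timezone.utc)
    cutoff = now - RETENTION
    return [r for r in records if r["stored_at"] >= cutoff]
```

In practice the same cutoff would drive a scheduled delete or archive job against the actual datastore, with each run recorded as audit evidence.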

Once secure handling is ensured, compliance also requires visibility and traceability.

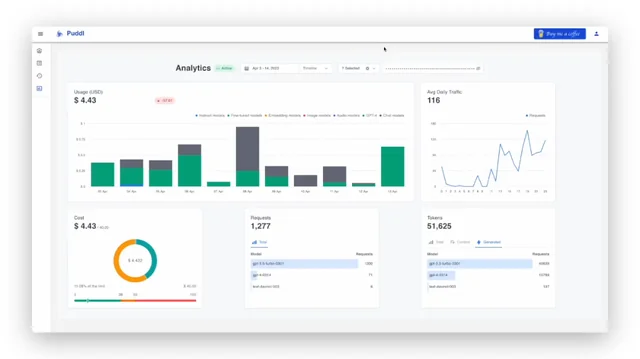

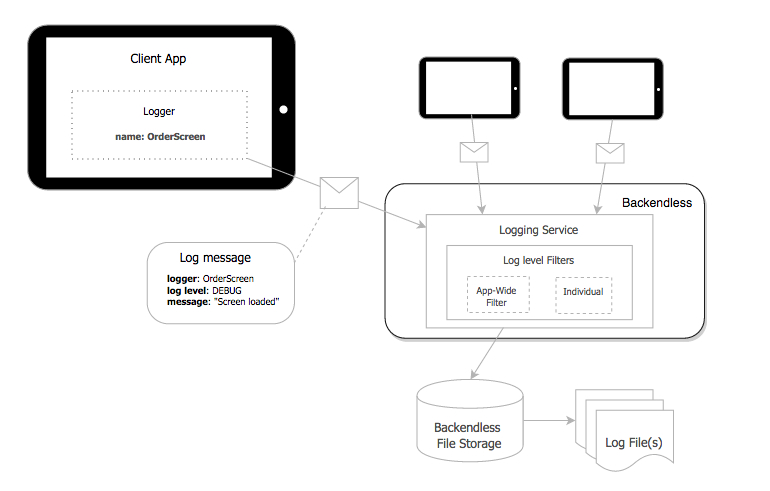

Compliance programs demand traceability - the ability to reconstruct when, how, and why an API was used. This requires systematic logging of all API activities in a secure, queryable format.

Essential logging fields include:

Logs should never include raw input or output data unless anonymized. Use centralized log aggregation tools (e.g., ELK Stack, Cloud Logging, or Splunk) with restricted access.
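One way to keep logs traceable without storing raw content is to record metadata plus a digest of the prompt. The field names below are assumptions chosen for illustration, not a prescribed schema. Note that a hash is not full anonymization: short or predictable prompts can be recovered by guessing, so treat the digest as a correlation aid only.

```python
import hashlib
import json
import logging
from datetime import datetime, timezone

logger = logging.getLogger("chatgpt_api_audit")


def build_audit_record(user_id: str, use_case: str, model: str,
                       prompt: str, status: str) -> dict:
    """Build a log record containing metadata and a prompt digest, never the prompt."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user_id": user_id,
        "use_case": use_case,
        "model": model,
        "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        "prompt_chars": len(prompt),
        "status": status,
    }


def log_call(user_id: str, use_case: str, model: str,
             prompt: str, status: str) -> None:
    """Emit the record as one JSON line for the log aggregator to ingest."""
    record = build_audit_record(user_id, use_case, model, prompt, status)
    logger.info(json.dumps(record))
```

Emitting one JSON object per line keeps the records queryable in most aggregation tools without custom parsing.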

Audit readiness practices:

After logging and visibility are implemented, focus shifts to governance and policy enforcement.

ChatGPT API integrations should follow the same governance and access policies as other enterprise services. Role-based access control (RBAC) and governance policies prevent unauthorized use and align the API’s function with approved business cases.

Practical governance mechanisms:

Access policies should map consistently to enterprise Identity and Access Management (IAM) systems and be reviewed regularly. Governance then evolves into continuous monitoring and automation.
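At its simplest, an RBAC check maps each role to the use cases it may invoke and denies everything else. The roles and use cases in this sketch are hypothetical; in a real deployment the mapping would come from the enterprise IAM system rather than a hard-coded dictionary.

```python
# Hypothetical role-to-use-case mapping; source this from your IAM system.
ROLE_PERMISSIONS = {
    "support_agent": {"support_summarization"},
    "data_analyst": {"support_summarization", "report_drafting"},
}


def can_use(roles: set, use_case: str) -> bool:
    """Deny by default: allow only if at least one role grants the use case."""
    return any(use_case in ROLE_PERMISSIONS.get(role, set()) for role in roles)
```

Unknown roles and unlisted use cases both evaluate to `False`, so gaps in the mapping result in denial rather than accidental access.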

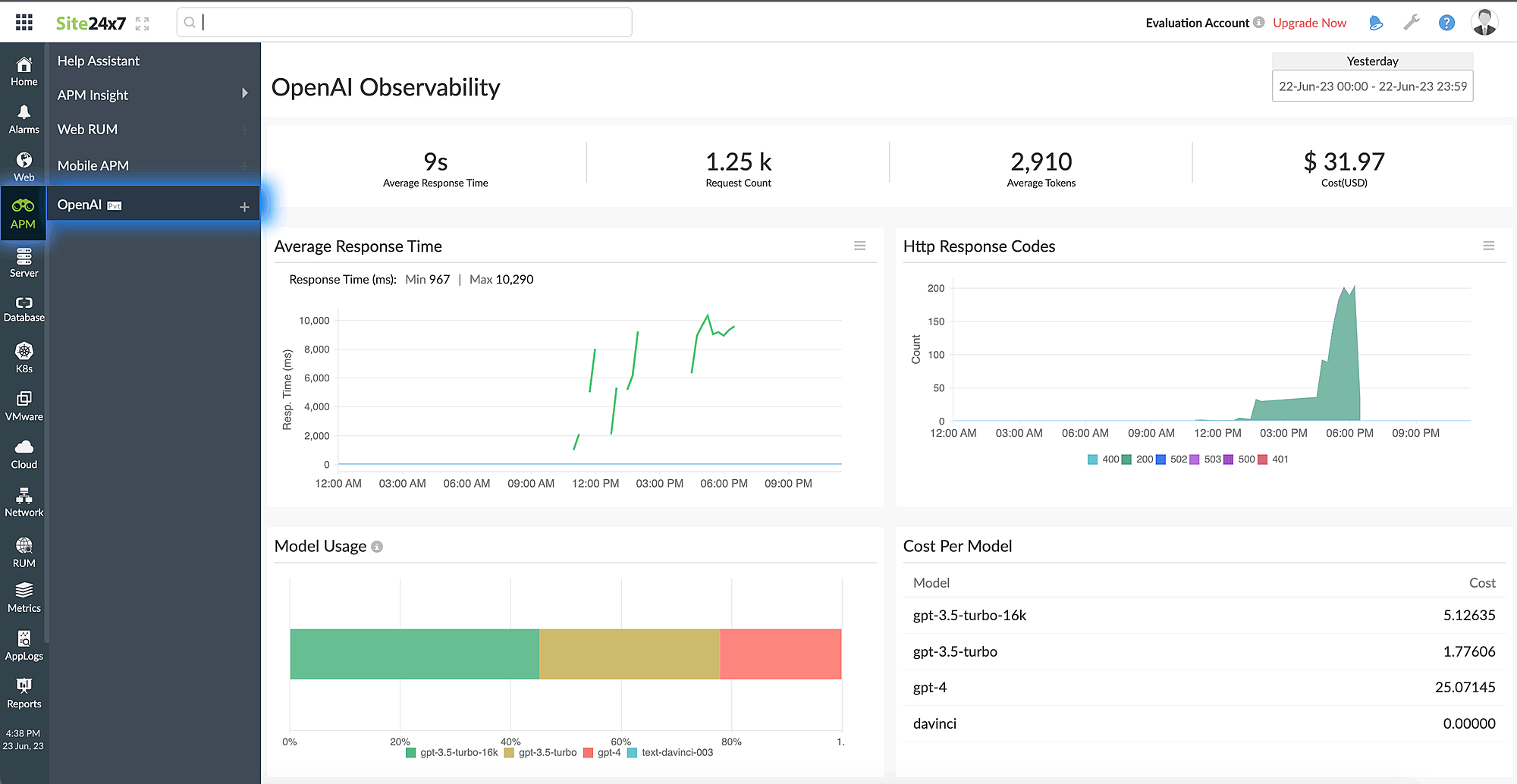

Compliance is not a one-time event. It requires ongoing monitoring and validation through automation. ChatGPT API configurations should be continuously checked for deviations or misuse.

Recommended automation checks:

Integrate compliance alerts into existing monitoring systems like Prometheus, CloudWatch, or SIEM platforms. Automated workflows can revoke access, disable keys, or alert compliance teams when anomalies are detected.
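An automated check of this kind can be sketched as a function that inspects usage metadata for each key and returns any policy violations. The thresholds (90-day rotation, 1,000 requests per hour) and the `KeyUsage` fields are illustrative assumptions; real values should come from your own policy and usage baselines.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Illustrative thresholds; derive real values from policy and usage baselines.
MAX_KEY_AGE = timedelta(days=90)
MAX_REQUESTS_PER_HOUR = 1000


@dataclass
class KeyUsage:
    """Usage metadata for one API key (hypothetical shape)."""
    key_id: str
    created_at: datetime
    requests_last_hour: int


def find_violations(usage: KeyUsage, now: datetime) -> list:
    """Return the names of every policy check this key currently fails."""
    violations = []
    if now - usage.created_at > MAX_KEY_AGE:
        violations.append("key_rotation_overdue")
    if usage.requests_last_hour > MAX_REQUESTS_PER_HOUR:
        violations.append("request_volume_anomaly")
    return violations
```

A scheduler could run this over all keys and route any non-empty result to the alerting system or to a workflow that disables the key.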

With automation established, the next step is ensuring organizational readiness for audits.

Compliance readiness requires clear documentation and evidence available for auditors and regulators.

Required documentation includes:

Training requirements:

Teams should be trained on OpenAI usage policies, data classification, and escalation procedures for compliance issues.

Training should be updated as OpenAI releases new API features or modifies policy terms. Documented completion records are essential for SOC 2 or ISO 27001 audits.

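OpenAI reports alignment with enterprise-grade frameworks such as SOC 2 Type II, ISO 27001, and GDPR for its own infrastructure. That alignment does not automatically extend to applications built on the API, so organizations still need to map their own integrations to the frameworks they are audited against.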

Key alignment steps:

At this stage, your organization reaches operational compliance maturity, where the API runs under controlled, monitored, and policy-aligned conditions.

Building ChatGPT API compliance requires a clear, structured approach that balances security, governance, and operational oversight. The following table provides a quick summary of the eight key steps outlined in this guide. Each step represents a layer of protection designed to ensure that API usage aligns with OpenAI’s policies, enterprise governance standards, and evolving regulatory requirements:

Achieving ChatGPT API compliance requires structured governance, disciplined implementation, and continuous oversight. By establishing strong controls around data flow, authentication, logging, and monitoring, enterprises can ensure that their use of the ChatGPT API meets both OpenAI’s standards and internal compliance obligations.

Compliance should be treated as a living framework - reviewed, refined, and reinforced as APIs evolve and organizational needs change.

Apply strict data minimization and anonymization before transmission.

See Reco’s Data Exposure Controls.

Capture only essential metadata for traceability while enforcing anonymization.

Learn more from Reco’s Compliance and Audit Visibility Framework.

Reco continuously monitors API usage for policy adherence and sensitive data exposure.

Discover Reco’s AI Data Protection Capabilities.