Top 10 AI Security Tools for Enterprises in 2026

AI security tools for enterprises have shifted from optional enhancements to essential controls as organizations adopt LLMs, internal copilots, and AI agents across SaaS and cloud environments. Traditional detection methods cannot fully track how these systems interact with identities or sensitive data, so teams need security platforms that understand AI behavior in context.

This shift has created a focus on solutions that strengthen SaaS access governance, protect LLMs at runtime, and detect threats across cloud and data infrastructure with precision. These capabilities now form the foundation of modern enterprise AI security strategies.

10 Best AI Security Tools Enterprises Should Evaluate

The tools below represent leading approaches to securing enterprise use of AI, from monitoring model behavior to controlling data access and improving visibility across SaaS and cloud environments. Each offers a distinct method for managing the risks that emerge as AI becomes part of everyday workflows.

1. Reco

Reco offers an AI security and governance platform that monitors identities, permissions, and data interactions across SaaS and AI systems. It maps user actions, embedded AI features, and LLM activity to identify abnormal behavior and reduce insider-driven risk. The platform operates through API based integrations and requires no agents, which supports rapid enterprise deployment. Reco also monitors AI usage patterns and flags activity that deviates from expected identity or data access behavior across LLM-powered workflows.

Best for: Enterprises that need centralized visibility into SaaS activity, emerging AI features inside business applications, and identity-linked behaviors that influence how data is accessed or shared.

Pricing: Quote-based on users and the number of integrations, offered directly and through the AWS Marketplace.

2. Lasso Security

Lasso Security is a GenAI-first cybersecurity platform designed to protect enterprise generative AI and LLM usage. Its offerings include a secured gateway for LLMs (intercepting API calls), browser and application integrations to track AI usage, and real-time detection, logging, masking, or blocking of risky interactions such as data leaks, prompt injection, or unauthorized data sharing.

Best for: Organizations deploying internal or customer-facing generative AI that require oversight of AI interactions, data flow control, and compliance across SaaS, cloud, and custom AI applications.

Pricing: Lasso does not publish a single fixed price. According to its website, pricing is custom and provided via quote.

3. Noma Security

Noma Security provides an AI security and governance platform that protects enterprise AI across models, data pipelines, SaaS applications, LLMs, and autonomous agents. It discovers AI assets across environments, delivers AI Security Posture Management, and applies runtime protections that address prompt injection, model manipulation, and unsafe agent behavior. Noma also supports compliance workflows and continuous monitoring for organizations that adopt generative AI at scale.

Best for: Companies that need deep visibility into AI assets and runtime protections across the full AI lifecycle.

Pricing: Noma uses a subscription-based SaaS model. On its AWS Marketplace listing, pricing is provided via contract, but enterprises must request a quote for purchase.

4. Aim Security

Aim Security delivers a unified AI security platform built for enterprises using generative AI tools. It provides an AI-Firewall for runtime protection, AI-Security Posture Management (AI-SPM) to discover and inventory AI assets, and continuous detection of threats like prompt injection, data leakage, or adversarial attacks targeting AI applications. Aim supports both third-party AI tools used by employees and custom internal AI agents or applications.

Best for: Organizations deploying public or private AI tools, building custom AI agents, or needing full-lifecycle protection and compliance across their AI environment.

Pricing: Contract-based pricing; details are provided via vendor quote.

5. Mindgard

Mindgard provides an AI security platform that evaluates and strengthens enterprise AI systems, including LLMs, generative models, multimodal architectures, and custom agents. It performs automated red teaming to identify issues such as prompt injection, model inversion, data poisoning, and evasion attempts. Mindgard also integrates with CI and CD pipelines and security operations so teams can assess model behavior and address AI-specific risks throughout development and production.

Best for: Organizations building or using AI/LLM applications who want to proactively detect and remediate AI-specific threats before deployment or in production.

Pricing: Quote-based. Mindgard offers custom pricing depending on deployment scope and enterprise needs.

6. Radiant Security

Radiant Security is an AI-powered Security Operations Center (SOC) platform that automatically triages, investigates, and responds to security alerts from any data source or sensor. It uses agentic AI to treat every alert - known or unknown - with human-level reasoning and scales threat detection across identity, network, cloud, email, endpoint, SaaS, and more. The platform also offers integrated log management with unlimited retention and fast querying.

Best for: Organizations that need to modernize security operations, cut alert fatigue, speed up incident response, and ensure comprehensive coverage across diverse IT and cloud environments.

Pricing: Custom / quote-based.

7. Lakera / LLM Guard

Lakera Guard is a runtime security and governance platform designed to protect generative-AI applications and large language models. It intercepts prompts and model outputs via a single API call, then applies real-time threat detection to block prompt injection, data leakage, malicious content, and unsafe outputs before they reach users or systems. Its model-agnostic, low-latency architecture supports multimodal LLMs and scales to high-volume enterprise deployments.

Best for: Enterprises deploying chatbots, internal AI assistants, or custom AI agents who require real-time guardrails, compliance controls, and content– or data-loss protection across all AI interactions.

Pricing: Quote-based.

8. CalypsoAI

CalypsoAI offers a comprehensive AI-security platform that protects generative AI applications and LLMs at inference time. It uses agentic red-teaming, real-time defense, and continuous observability to protect models, AI agents, and applications against threats like prompt injection, jailbreaks, data leakage, and adversarial attacks. The platform is model-agnostic, supports any LLM or AI system, and integrates with existing enterprise infrastructure (SIEM, SOAR, audit workflows).

Best for: Enterprises deploying or scaling generative AI across multiple tools, models, or agents, especially when they need runtime security, compliance controls, and risk-aware model evaluation.

Pricing: CalypsoAI does not publish fixed pricing; it offers custom enterprise licensing and quote-based plans.

9. Cranium

Cranium provides an AI governance and security platform that helps enterprises inventory, test, and protect their AI and ML ecosystems. It discovers models, datasets, and pipelines, creates an AI Bill of Materials, and performs automated evaluations to uncover unsafe behavior or configuration issues. Cranium also supports compliance workflows and third-party risk oversight for organizations that rely on both internal and external AI systems.

Best for: Enterprises that need clear visibility into AI assets and structured security testing across the AI supply chain.

Pricing: Quote-based.

10. Protect AI

Protect AI provides an AI security platform that helps enterprises secure models, pipelines, and runtime environments. Its products support model scanning, testing, red teaming, and continuous monitoring for risks such as prompt injection, data exposure, and unsafe model behavior. Protect AI also offers tools for managing the security posture of machine learning systems and for identifying issues across the AI supply chain.

Best for: Enterprises that need security coverage for the entire AI lifecycle, including development, evaluation, and production use.

Pricing: Quote-based.

AI Security Tools Comparison Table

Below is a structured comparison of the ten AI security platforms based on their primary focus, core AI or ML capabilities, deployment approach, and enterprise fit:

Essential Features in AI Security Tools for Enterprises

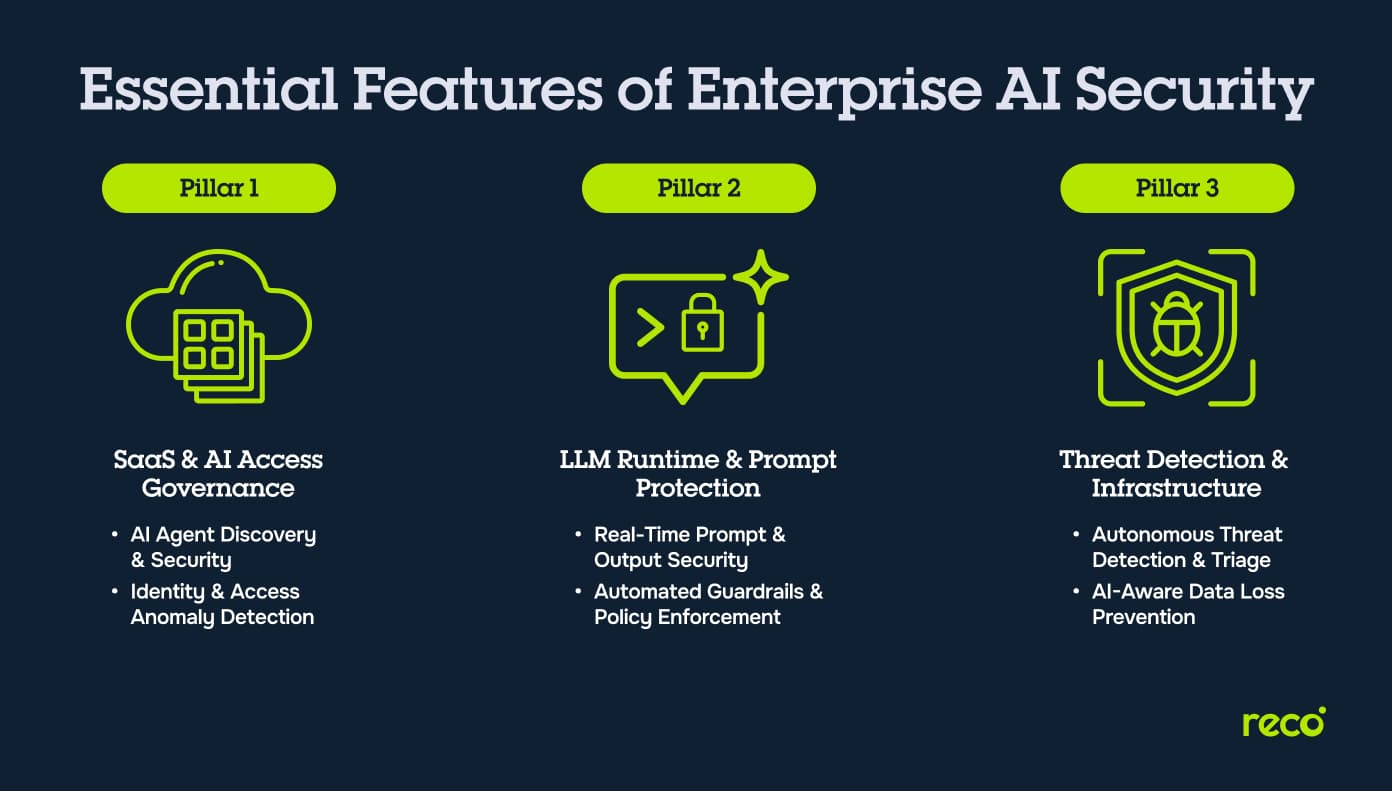

Enterprises evaluate AI security platforms by how well they control access, manage model interactions, and detect threats across SaaS and cloud environments. The three pillars below outline the capabilities that matter most.

Pillar 1: SaaS and AI Access and Governance

Modern SaaS environments now include embedded AI agents and features that require visibility and oversight. Tools in this category help organizations understand how users, identities, and data interact with AI across applications.

- Discovery and Security of SaaS AI Agents and Apps: Tools identify embedded AI features, hidden agents, and third-party AI apps across SaaS platforms to reveal data flows and restrict unapproved AI usage.

- Identity and Access Anomaly Detection: Platforms analyze user behavior to find irregular access patterns, unusual permissions, and identity changes that may indicate misuse or unintended data exposure.

Pillar 2: LLM Runtime and Prompt Protection

Prompt-based interactions require active monitoring because risks surface at the moment of input and output. These features protect models, users, and data during real-time exchanges.

- Real-Time Prompt and Output Security: AI security platforms evaluate prompts and responses to detect prompt injection, hidden instructions, unsafe content, or sensitive information before it is processed or returned.

- Automated Policy Enforcement and Guardrails: Systems apply organizational rules to each interaction, blocking or modifying prompts or outputs that conflict with internal policy or compliance requirements.

Pillar 3: Threat Detection and Infrastructure

AI adoption expands the attack surface across cloud, identity, and data systems. Tools in this pillar help security teams detect operational risks early and understand how threats move through the environment.

- Autonomous Threat Detection and Triage: Platforms use artificial intelligence to detect suspicious activity across cloud workloads, identities, and networks, then highlight the events that require immediate attention.

- Data Loss Prevention with AI Context Awareness: AI-driven DLP tracks how information moves through SaaS platforms and leverages AI features to identify risky transfers or unusual access patterns before sensitive data leaves the environment.

How to Evaluate the Right AI Security Tool for Your Organization

Selecting an AI security platform requires a structured assessment of how well each product aligns with your internal AI usage, risk areas, and operational needs. The table below organizes the key evaluation criteria with clear guidance for each:

Why Reco Stands Out Among AI Security Tools for Enterprises

Reco focuses on understanding how people interact with data across SaaS and AI systems. Its platform analyzes identities, permissions, behavioral patterns, and data access to reveal risks that cannot be seen through event logs alone.

- Unified Understanding of Human to Data Interactions: Reco builds a graph of users, identities, permissions, and data access events to show how people interact with information across SaaS and AI-enabled applications.

- AI-Driven Insight Into Identities, Access, and Sensitive Actions: The platform applies artificial intelligence to evaluate identity context, unusual access paths, and sensitive actions that may indicate risk.

- Contextual Insider Risk Protection Beyond Alerts: Reco correlates behavior, identity attributes, and data exposure patterns to provide context-driven insight rather than isolated notifications.

- Detection of Abnormal LLM, SaaS, and Data Flows: Reco monitors how users engage with SaaS systems and AI features, including LLM-powered workflows, to identify interactions that deviate from normal patterns.

- Low-friction, Agentless Deployment for Rapid Time to Value: Reco connects through API-level integrations and requires no agents, which allows organizations to deploy the platform quickly across their environment.

Conclusion

Enterprise AI is evolving faster than most security programs, which makes the choice of the right security platform increasingly important. The tools reviewed here reflect a broader movement toward solutions that can interpret behavior, manage model interactions, and control how information flows through AI-enabled systems. As organizations expand their use of generative AI, the most effective path forward comes from selecting platforms that align with real operational patterns and that can adapt as new AI-driven workflows appear.

How do AI security tools differ from traditional threat detection platforms?

AI security tools focus on risks created by model behavior and AI-enabled workflows, while traditional platforms monitor infrastructure and event anomalies. Key differences include:

- Insight into prompt-level behavior and model interactions

- Visibility into AI usage inside SaaS applications

- Analysis of identity context paired with LLM activity

- Monitoring of data movement generated by AI tools

What challenges do enterprises face when securing internal use of AI and LLMs?

Enterprises encounter operational and oversight challenges when AI adoption expands across teams. Common issues include:

- Limited visibility into real LLM usage

- Difficulty tracking sensitive data flowing into prompts

- Unmanaged AI-enabled features inside SaaS applications

- Shadow AI and unauthorized tools

- Rapid change in model behavior that outpaces existing controls

How can organizations ensure AI-driven detections are accurate and not false positives?

Organizations strengthen detection accuracy by grounding evaluations in real workflows. This includes reviewing identity behavior, data paths, and model usage together rather than separately. Human validation loops, periodic recalibration, and practical testing with real prompts help refine detection logic and reduce noise without reducing coverage.

How does Reco use AI to detect insider threats and abnormal identity behaviors?

Reco analyzes how users interact with data, identities, and AI features to identify activity that deviates from normal patterns. Its approach includes:

- Mapping permissions, roles, and sensitive actions

- Recognizing unusual access paths or behaviors

- Linking LLM and SaaS interactions to identity context

- Highlighting patterns that may indicate insider risk

Can Reco secure enterprise adoption of generative AI tools and unmanaged LLM usage?

Reco supports oversight of generative AI by monitoring how employees use AI features inside SaaS platforms and by identifying high-risk interactions with LLM-powered workflows. Its visibility helps organizations understand where sensitive data may move, how identity context influences AI usage, and which behaviors require closer review.

.svg)