The SaaS Attack Surface Just Expanded to Clawdbot

Clawdbot went viral over the weekend of January 24–25, 2026. Within days, it became the most talked-about AI tool in developer and tech circles, promising something different: a fully local, open-source AI personal assistant that connects to WhatsApp, Telegram, email, and calendars to handle life admin autonomously.

But just as quickly, security researchers started raising alarms. Blockchain security firm SlowMist identified a gateway exposure putting hundreds of API keys and private chat logs at risk, and security researcher Jamieson O'Reilly found hundreds of Clawdbot Control servers exposed to the public internet, many with no authentication at all.

What Makes Clawdbot Different

Most shadow AI concerns focus on employees pasting sensitive data into ChatGPT or other cloud-based tools, which is a real risk. Samsung learned this the hard way in 2023 when engineers leaked source code, meeting transcripts, and chip testing sequences into ChatGPT within 20 days of getting access. The company eventually banned generative AI tools entirely.

Clawdbot operates on a different level. Rather than a browser-based chatbot you interact with during work hours, it's an always-on autonomous agent with full system access to your machine. It can read and write files, execute shell commands, run scripts, and control browsers. It stores credentials in plaintext configuration files on your local disk, including API keys, OAuth tokens, and bot secrets. It can also connect to your most sensitive communication channels: email, messaging apps, calendars.

The project's own documentation acknowledges the risk: "Running an AI agent with shell access on your machine is… spicy. There is no 'perfectly secure' setup."

How the Authentication Bypass Works

One of the core issues is an authentication bypass that occurs when Clawdbot's gateway runs behind a reverse proxy. The system is designed to automatically trust localhost connections without authentication. But most deployments place the gateway behind nginx or Caddy on the same server, so all connections appear to come from localhost and get automatically approved. Because of this, external attackers effectively get treated as local users.

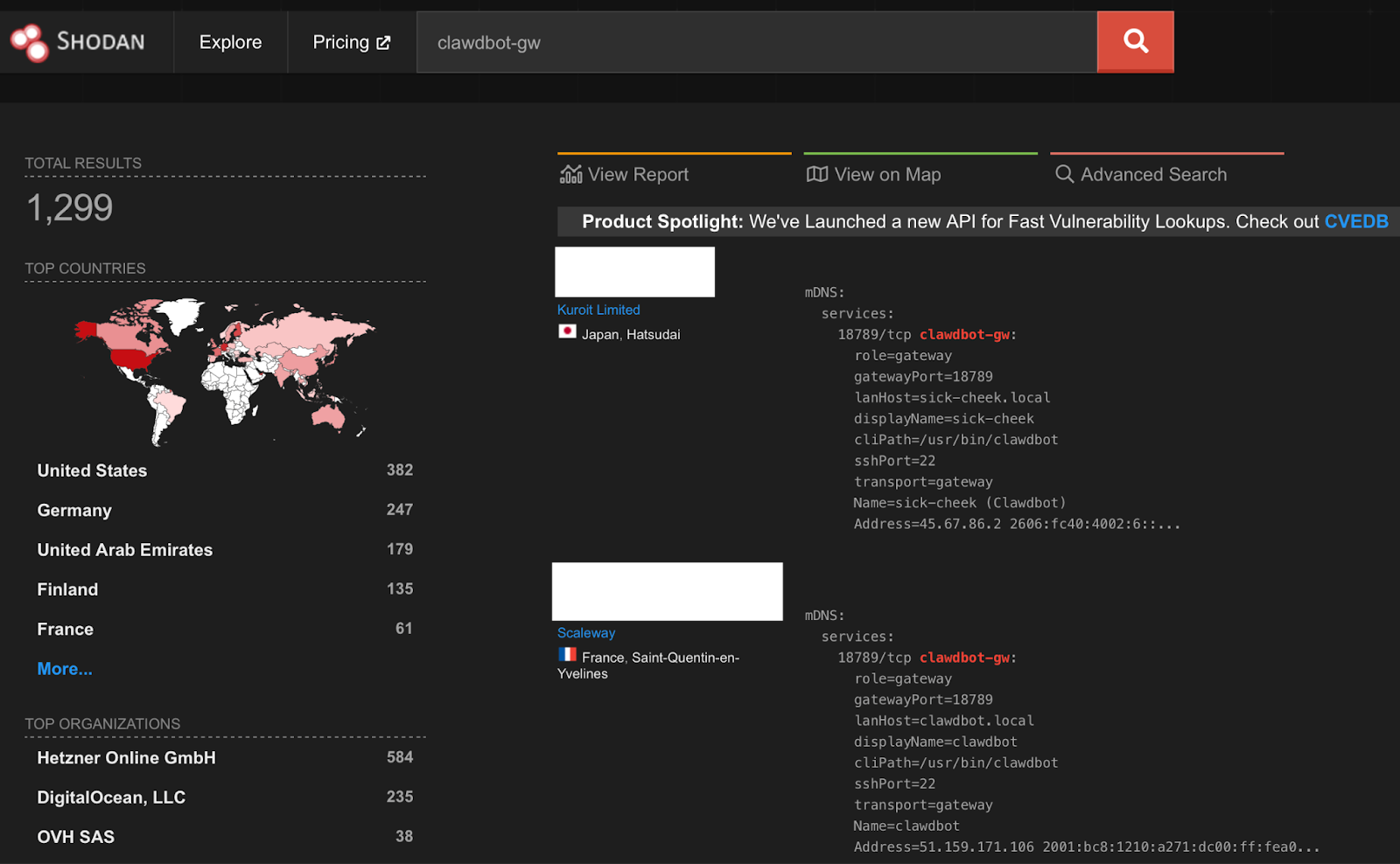
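
The failure mode described above can be sketched in a few lines of Python. This is an illustration of the general pattern, not Clawdbot's actual code: the function names, the `trusted_proxies` set, and the use of the `X-Forwarded-For` header are all assumptions made for the example.

```python
import ipaddress
from typing import Optional


def is_loopback(addr: str) -> bool:
    """Return True if addr parses as a loopback IP (127.0.0.0/8 or ::1)."""
    try:
        return ipaddress.ip_address(addr).is_loopback
    except ValueError:
        return False  # unparseable addresses are never trusted


def naive_is_trusted(peer_addr: str) -> bool:
    """Flawed pattern: trust whoever the TCP peer is, if it is loopback.

    Behind a same-host reverse proxy the peer is always the proxy,
    so every external request looks local.
    """
    return is_loopback(peer_addr)


def proxy_aware_is_trusted(
    peer_addr: str,
    forwarded_for: Optional[str],
    trusted_proxies: set[str],
) -> bool:
    """Resolve the real client before applying the loopback rule.

    The X-Forwarded-For value is only honored when the TCP peer is a
    proxy we explicitly trust (hypothetical configuration value).
    """
    client = peer_addr
    if peer_addr in trusted_proxies and forwarded_for:
        # The rightmost entry is the one appended by our own proxy;
        # entries to its left are client-supplied and can be forged.
        client = forwarded_for.split(",")[-1].strip()
    return is_loopback(client)
```

With a proxy on the same host, `naive_is_trusted("127.0.0.1")` approves a request that actually originated from `203.0.113.7`, while `proxy_aware_is_trusted("127.0.0.1", "203.0.113.7", {"127.0.0.1"})` rejects it. A request with no forwarding header is still treated as local in this sketch, which is why the proxy must be configured to always set the header.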

At the time of writing, a Shodan search for "clawdbot-gw" returns 1,299 exposed instances, with the highest concentrations in the United States (382), Germany (247), and the United Arab Emirates (179). Different search queries and scanning tools have returned varying counts, with some researchers reporting even higher numbers.

Misconfigured instances can expose:

- Plaintext credentials (API keys, bot tokens, OAuth secrets, signing keys)

- Full conversation histories across all connected chat platforms

- The ability to send messages as the user

- Remote command execution on the host machine

The attack surface also extends further. Because Clawdbot has permission to read emails and execute system commands, every inbound message becomes a potential attack vector. A prompt injection delivered via email or chat could grant an attacker the same access the agent has: full control of the machine.

Why This Matters for Enterprise Security

An uncomfortable reality is that your employees may have already installed Clawdbot. Its virality wasn't confined to hobbyist developers. It spread through tech circles, startup communities, and productivity enthusiasts, the same populations that overlap with your workforce.

If even one employee connected Clawdbot to their work email, granted it access to corporate calendars, or stored company API credentials in its configuration files, you now have an unmanaged endpoint with autonomous access to sensitive systems. Unlike traditional shadow IT, you probably have zero visibility into it.

The risk compounds because of what Clawdbot stores locally. Infostealers now have a centralized target. A Clawdbot installation might contain Slack tokens, Jira API keys, VPN credentials, email OAuth tokens, and months of conversation history, all in predictable file locations. It is likely that infostealer operators will begin targeting ~/.clawdbot/ directories.

Clawdbot vs. Traditional Shadow AI: A Risk Comparison

| Risk Factor | Traditional Shadow AI (ChatGPT, etc.) | Clawdbot |
| --- | --- | --- |
| Access level | Browser-based, cloud-processed | Full system access (shell, files, browser control) |
| Credential storage | Cloud-side (provider's servers) | Plaintext on local disk |
| Known incidents | Samsung source code leak (2023), OpenAI Redis bug exposed chat titles and payment info, 100K+ credentials stolen via infostealers | Hundreds of exposed instances leaking API keys, OAuth tokens, chat logs; prompt injection to RCE demonstrated in 5 minutes |
| Attack surface | Data leakage via prompts, prompt poaching via browser extensions | Direct RCE if misconfigured, prompt injection attacks, infostealer targeting |
| Persistence | Session-based | Always-on autonomous agent |
| Data exposure risk | Conversations potentially fed to training data, third-party breaches | Conversations + credentials + files + full system compromise |

This isn't to minimize the risks of traditional AI tools, but Clawdbot represents a qualitative shift. When an AI assistant has persistent access to your file system, credentials, and communication channels, a single misconfiguration or successful injection attack compromises the entire machine.

The Rebrand Won't Fix the Architecture

Shortly after the security concerns went public, Clawdbot rebranded to Moltbot following trademark concerns reportedly raised by Anthropic over the name's similarity to Claude. The agent persona is now called Molty instead of Clawd, though at the time of writing the ~/.clawdbot/ directory path and CLAWDBOT_* environment variables remain unchanged for backward compatibility.

Moltbot's documentation now includes detailed security guidance: using loopback bindings, configuring trusted proxies, implementing IP allowlists, rotating credentials after incidents. But these mitigations require exactly the kind of security expertise that most users adopting the tool don't have. The gap between "installed and working" and "installed and secure" is where breaches happen.
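
To make two of those mitigations concrete, here is a minimal Python sketch of a loopback-binding check and an IP allowlist check. It does not use Moltbot's configuration format or option names, which are not shown here; `bind_host` and `allowlist` are hypothetical inputs chosen for illustration.

```python
import ipaddress


def bind_is_loopback_only(bind_host: str) -> bool:
    """Return True if the listener is reachable only from the local machine.

    bind_host is a hypothetical setting such as "127.0.0.1" or "0.0.0.0".
    """
    try:
        return ipaddress.ip_address(bind_host).is_loopback
    except ValueError:
        # Not an IP literal; only the conventional hostname is accepted.
        return bind_host == "localhost"


def client_allowed(client_ip: str, allowlist: list[str]) -> bool:
    """Return True if client_ip falls inside any CIDR range in allowlist."""
    addr = ipaddress.ip_address(client_ip)
    return any(addr in ipaddress.ip_network(cidr) for cidr in allowlist)
```

A bind address of `0.0.0.0` fails the first check because it listens on every interface, and a client at `203.0.113.7` fails the second when the allowlist contains only private ranges such as `10.0.0.0/8`.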

What Security Teams Should Do

First, establish visibility. You can't govern what you can't see. Determine whether Clawdbot or Moltbot is running in your environment by checking endpoint logs for characteristic directories (~/.clawdbot/, ~/clawd/, etc.) and scanning network traffic for Clawdbot Control signatures.
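
As a starting point for the endpoint side of that check, the following Python sketch looks for the two directory names mentioned above under each user's home directory. The directory names come from this article; the function name and the idea of scanning a single home root (for example /home or /Users) are assumptions for the example, and a real rollout would more likely run through your existing endpoint tooling.

```python
from pathlib import Path

# Directory names taken from the article; extend as new variants appear.
CANDIDATE_DIRS = (".clawdbot", "clawd")


def find_agent_dirs(home_root: Path) -> list[Path]:
    """Return candidate agent directories found under each home in home_root."""
    hits: list[Path] = []
    try:
        homes = sorted(p for p in home_root.iterdir() if p.is_dir())
    except OSError:
        return hits  # home root missing or unreadable
    for home in homes:
        for name in CANDIDATE_DIRS:
            candidate = home / name
            try:
                if candidate.is_dir():
                    hits.append(candidate)
            except OSError:
                continue  # skip homes we are not permitted to inspect
    return hits
```

Calling `find_agent_dirs(Path("/home"))` on a Linux host returns a list of matching paths, which can then be fed into whatever inventory or ticketing process you already use.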

Second, assess the blast radius. If you find instances, understand what they had access to. Which credentials were stored? What systems were connected? What data flowed through the agent? Assume that anything accessible to the agent should be treated as potentially exposed.
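
One way to begin answering "which credentials were stored" is to list the names of secret-looking settings without printing their values. The Python sketch below assumes the configuration can be loaded as JSON-like nested dictionaries and lists, and that secret fields can be recognized by name; both are assumptions, since the actual file format and key names are not described here.

```python
from typing import Any, Iterator

# Hypothetical substrings that suggest a setting holds a credential.
SECRET_HINTS = ("key", "token", "secret", "password")


def secret_paths(node: Any, prefix: str = "") -> Iterator[str]:
    """Yield dotted paths of string settings whose names look like secrets.

    Only the paths are yielded, never the values, so the output is safe
    to paste into an incident ticket.
    """
    if isinstance(node, dict):
        for key, value in node.items():
            path = f"{prefix}.{key}" if prefix else str(key)
            looks_secret = any(h in str(key).lower() for h in SECRET_HINTS)
            if isinstance(value, str) and value and looks_secret:
                yield path
            else:
                yield from secret_paths(value, path)
    elif isinstance(node, list):
        for index, item in enumerate(node):
            yield from secret_paths(item, f"{prefix}[{index}]")
```

Each path returned is a credential to rotate and a connected system to review. Name-based matching will miss secrets stored under unusual keys, so treat the result as a lower bound.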

Third, have a policy conversation with employees. Outright bans rarely work. Employees adopt shadow AI because it makes them more productive, and they'll find workarounds. Instead, focus on providing sanctioned alternatives that meet legitimate needs while maintaining security controls. If employees want AI assistance with email and scheduling, what can you offer that doesn't require giving an autonomous agent shell access to their machines?

Fourth, build ongoing detection. Clawdbot won't be the last tool like this. The agentic AI trend is accelerating, and each new tool will create similar tensions between capability and security. Your detection capabilities need to evolve to surface AI tools connecting to corporate systems, regardless of whether they're on an approved list.

How SaaS & AI Security Helps

The four steps above share a common dependency: visibility. You can't assess blast radius if you don't know what's connected. You can't have policy conversations without data on what employees are actually using, and you can't build ongoing detection manually.

The challenge with Clawdbot, and the wave of agentic AI tools that will follow, is that traditional security wasn't built for this. Endpoint detection looks for malware, not productivity tools. Network monitoring sees encrypted traffic, not what an AI agent is doing with your credentials.

Organizations average 1,000+ third-party connections they don't know exist. Add autonomous AI agents that create their own connections, store OAuth tokens, and access multiple platforms at once, and these hidden attack vectors multiply fast.

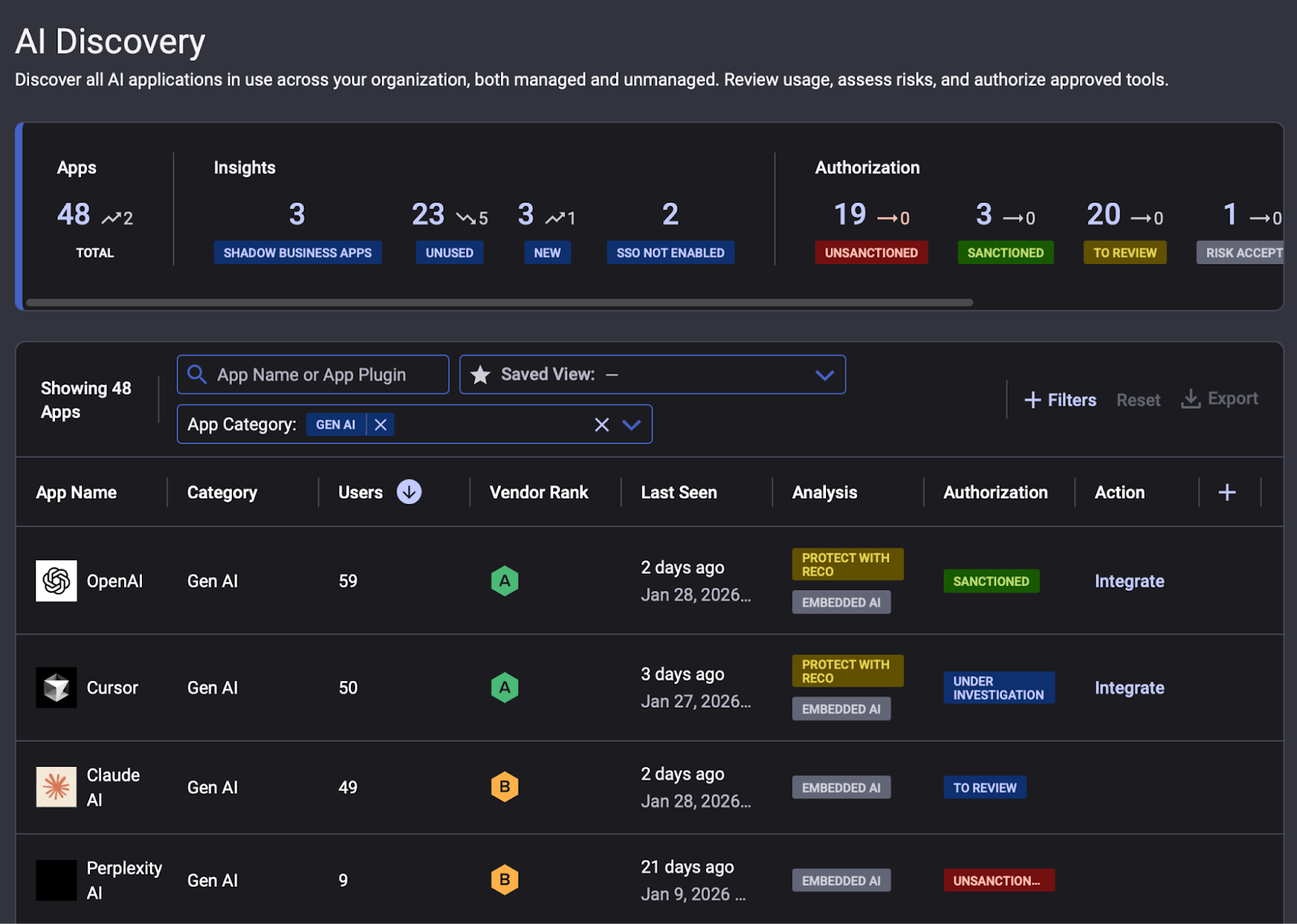

Continuous Shadow AI Discovery

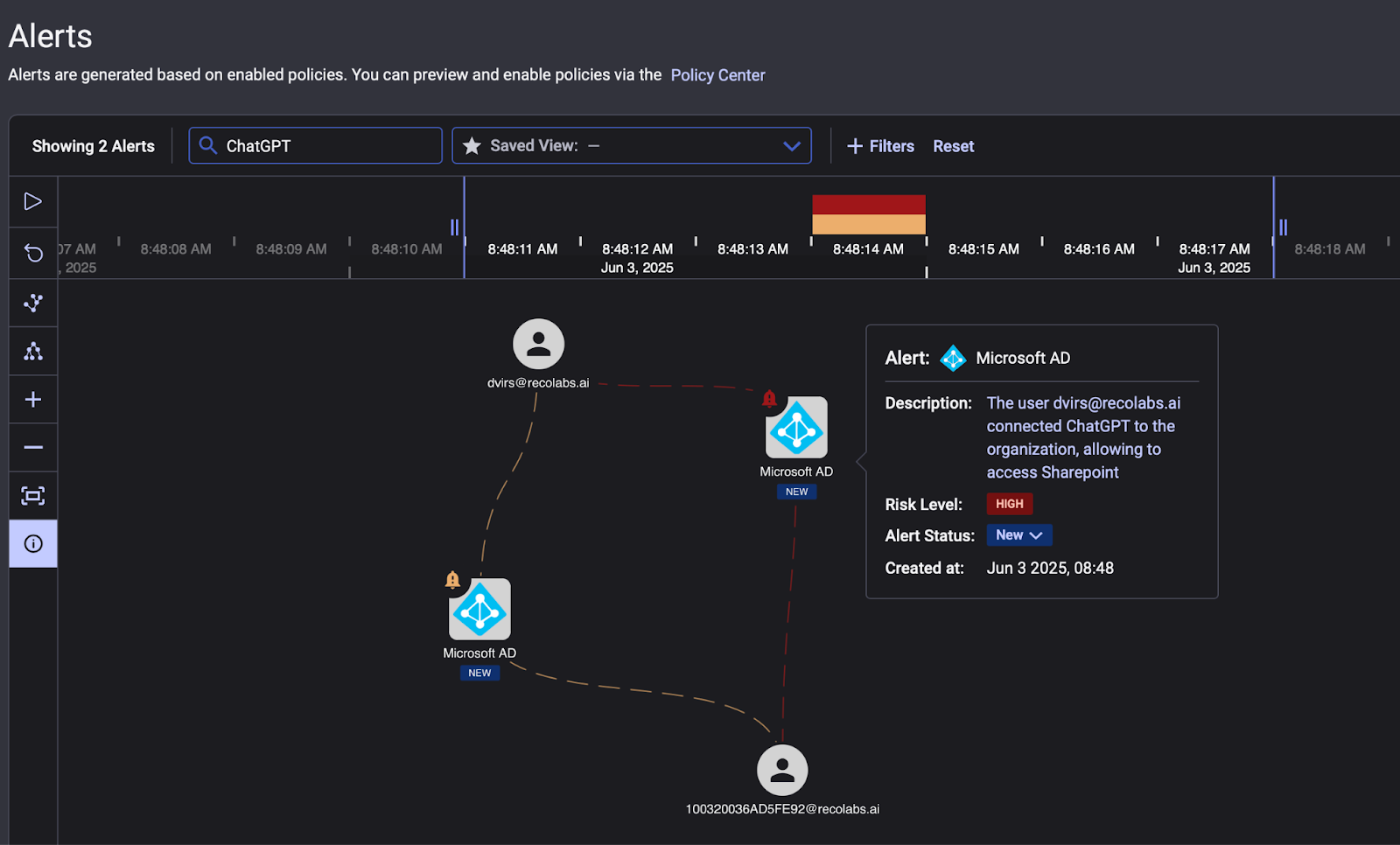

With Reco, you can automatically detect AI tools like Clawdbot accessing your data without IT approval, from personal ChatGPT usage to autonomous agents connecting to corporate systems. When a new AI app appears in your environment, you'll know in seconds, not after the breach.

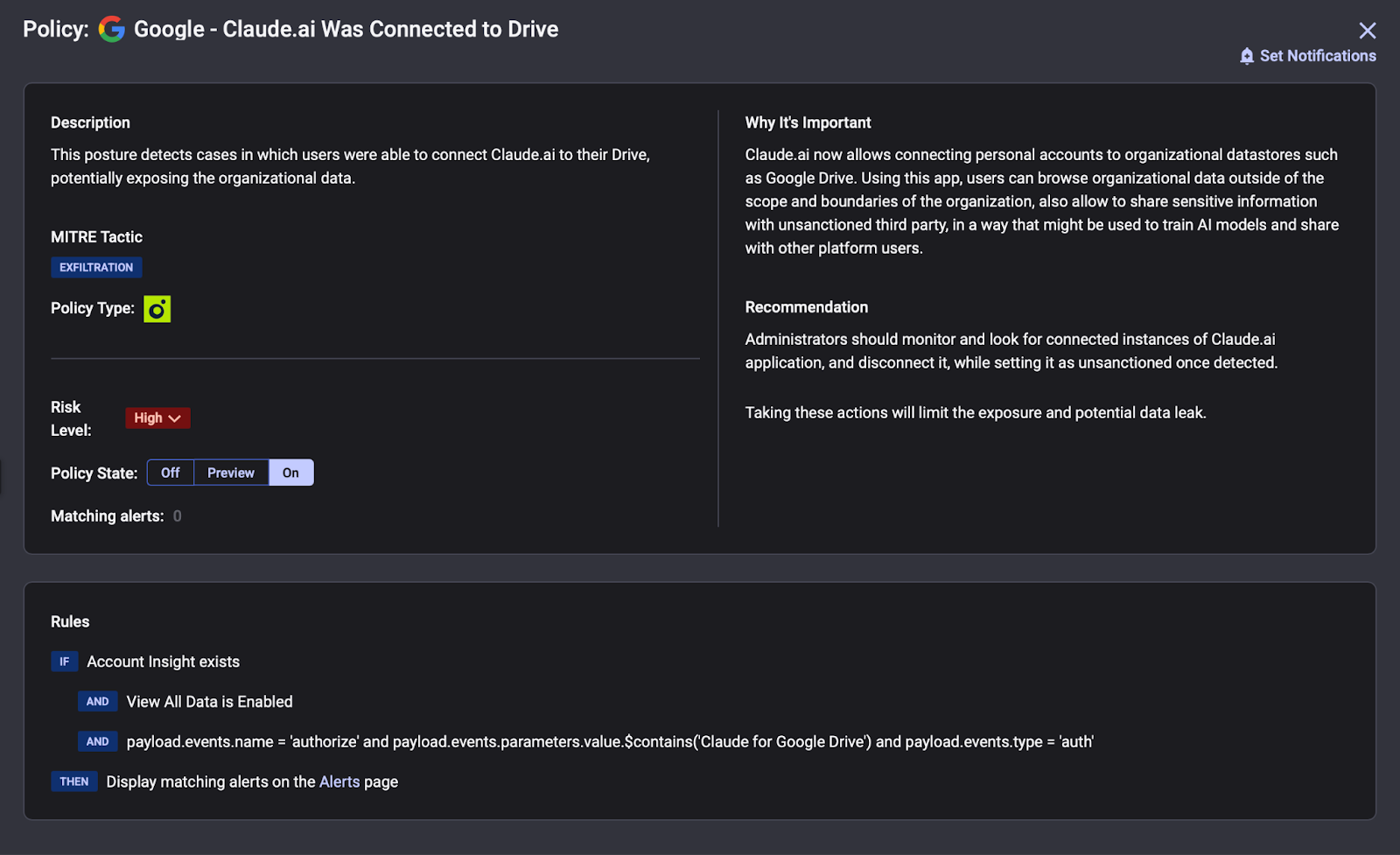

SaaS-to-SaaS Connection Mapping

You can track every OAuth grant, API integration, and third-party connection in real time. When tools like Clawdbot store your Slack tokens or connect to corporate calendars, you'll see exactly what systems they can reach and what data pathways they create.

Identity and Access Governance

You can map every identity to every application and AI agent they access, revealing hidden permissions and risky access patterns. Know when employees grant autonomous agents elevated privileges to sensitive systems, and identify overprovisioned accounts before they become attack vectors.

Threat Detection with Context

With Reco's Knowledge Graph, you can connect user behaviors, SaaS activities, and risk signals into actionable intelligence. For incidents like Clawdbot exposure, get full blast radius analysis: which credentials were accessible, what data flowed through the agent, and where to focus remediation.

The Bigger Picture for Agentic AI

Shadow AI isn't new. Employees have been adopting unsanctioned tools for years, and security teams have learned to deal with data leaking into cloud services they don't control. But Clawdbot shifts the attack surface somewhere harder to reach: personal devices, home networks, machines that never touch your MDM.

Clawdbot's viral moment is a preview of what's coming. As AI assistants evolve from stateless chatbots to persistent autonomous agents, the security model has to evolve too. The questions aren't just "what data are employees pasting into AI tools" anymore. They're "what systems have AI agents been granted access to" and "what actions can they take without human approval."

Recent data shows that 91% of AI tools operate without IT approval. That statistic becomes far more consequential when those tools aren't just processing prompts but executing commands.

You can't govern shadow AI if you don't know it's there, and you can't secure agentic AI if you're still thinking about it as a chatbot. This is why visibility matters.

Reco's shadow AI discovery feature helps security teams identify unsanctioned AI tools across your environment, so you can get ahead of the next Clawdbot before it lands on your network.

See how shadow AI discovery works and get ahead of the risk with Reco.

Gal Nakash

ABOUT THE AUTHOR

Gal is the Cofounder & CPO of Reco and a former Lieutenant Colonel in the Israeli Prime Minister's Office. He is a tech enthusiast with a background as a security researcher and hacker. Gal has led teams in multiple cybersecurity areas, with expertise in the human element.
