OpenClaw: The AI Agent Security Crisis Unfolding Right Now

You've likely heard about OpenClaw by now. This open-source AI agent has quickly become one of the fastest-growing repositories in GitHub's history, amassing over 135,000 stars within weeks. However, it has also sparked the first major AI agent security crisis of 2026. Reco can help you identify whether it's present in your environment.

The OpenClaw Phenomenon

OpenClaw (formerly Clawdbot and then Moltbot; the project was renamed following trademark disputes) is an open-source AI agent created by developer Peter Steinberger. Unlike traditional AI assistants that just answer questions, OpenClaw is autonomous. It can execute shell commands, read and write files, browse the web, send emails, manage calendars, and take actions across your digital life.

Users interact with OpenClaw through messaging platforms like WhatsApp, Slack, Telegram, Discord, and iMessage. The agent runs locally and connects to large language models like Claude or GPT. Its "persistent memory" feature means it remembers context across sessions, learning your preferences and habits over time.

The appeal is clear: an AI assistant that takes action on your behalf. People are buying dedicated hardware just to run OpenClaw around the clock. However, that capability comes with serious consequences, and it didn't take long for them to surface.

A Cascade of Security Failures

Within just two weeks of going viral, OpenClaw was associated with a growing number of security incidents that escalated in both scope and severity. These issues ranged from traditional vulnerabilities to exposed management interfaces and the distribution of malicious skills. Individually, each would be concerning. Taken together, they illustrate why AI agents with broad system access represent a fundamentally new risk category.

January 27-29, 2026 - ClawHavoc

Attackers distributed 335 malicious skills via ClawHub, OpenClaw's public marketplace. These skills used professional documentation and innocuous names like "solana-wallet-tracker" to appear legitimate, then instructed users to run external code that installed keyloggers on Windows or Atomic Stealer malware on macOS. Researchers later confirmed 341 malicious skills out of 2,857, meaning roughly 12% of all skills in the registry were malicious.

January 30, 2026 - A Quiet Patch

OpenClaw released version 2026.1.29, patching CVE-2026-25253 before public disclosure. The vulnerability allowed one-click remote code execution via a malicious link. Because the Control UI trusted URL parameters without validating them, attackers could hijack instances through cross-site WebSocket hijacking, even instances configured to listen only on localhost, since the WebSocket connection is opened by the victim's own browser.
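Cross-site WebSocket hijacking succeeds when a WebSocket server accepts a handshake without checking where it came from: browsers do not apply the same-origin policy to WebSocket connections, so the server has to inspect the `Origin` header itself. The Python sketch below shows that generic check. It is not OpenClaw's actual patch; the function name `is_trusted_origin` and the allowlisted origins (a local UI on port 8080) are hypothetical.

```python
from typing import Optional
from urllib.parse import urlsplit

# Hypothetical allowlist: the (scheme, host, port) tuples a local UI is served from.
ALLOWED_ORIGINS = {
    ("http", "localhost", 8080),
    ("http", "127.0.0.1", 8080),
}
DEFAULT_PORTS = {"http": 80, "https": 443}


def is_trusted_origin(origin_header: Optional[str]) -> bool:
    """Accept a WebSocket handshake only if its Origin header is allowlisted.

    A malicious page at https://evil.example presents its own origin here,
    even though the connection it opens targets localhost.
    """
    if not origin_header:
        # Browser-facing UI: treat a missing Origin as untrusted.
        return False
    try:
        parts = urlsplit(origin_header)
        port = parts.port or DEFAULT_PORTS.get(parts.scheme)
    except ValueError:
        # urlsplit(...).port raises ValueError for malformed ports.
        return False
    # hostname is lowercased by urlsplit, so "LOCALHOST" still matches.
    return (parts.scheme, parts.hostname, port) in ALLOWED_ORIGINS
```

Rejecting handshakes with no `Origin` header suits a browser-only UI; non-browser clients would need a separate way to authenticate.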

January 31, 2026 - Massive Exposure

Censys identified 21,639 instances publicly accessible on the internet, up from approximately 1,000 just days earlier. The United States had the largest share of exposed deployments, followed by China, where an estimated 30% of instances were running on Alibaba Cloud. Misconfigured instances were found leaking API keys, OAuth tokens, and plaintext credentials.

January 31, 2026 - Moltbook Breach

That same week, Moltbook (a social network built exclusively for OpenClaw agents) was found to have an unsecured database exposing 35,000 email addresses and 1.5 million agent API tokens. The platform, which had grown to over 770,000 active agents, demonstrated how quickly an unvetted ecosystem can compound risk.

February 3, 2026 - Full Disclosure

CVE-2026-25253 was publicly disclosed with a CVSS score of 8.8. The same day, OpenClaw issued three high-impact security advisories, covering the one-click RCE vulnerability and two command injection vulnerabilities. Security researchers confirmed the attack chain takes "milliseconds" after a victim visits a single malicious webpage.

Why This Matters for Your Organization

These technical flaws are alarming on their own, but the deeper issue is what happens when employees connect personal AI tools to corporate systems, often without the security team's visibility.

OpenClaw integrates with email, calendars, documents, and messaging platforms. When connected to corporate SaaS apps, like Slack or Google Workspace, the agent can access Slack messages and files, emails, calendar entries, cloud-stored documents, data from integrated apps, and OAuth tokens that enable lateral movement.

Making matters worse, the agent's persistent memory means any data it accesses remains available across sessions. If the agent is later compromised (through a malicious skill, prompt injection, or a vulnerability exploit), attackers inherit all of that access.

This is basically shadow AI with elevated privileges. Employees are granting AI agents access to corporate systems without security team awareness or approval, and the attack surface grows with every new integration.

Identifying OpenClaw in Your Environment

Traditional security tools struggle to detect AI agent activity. Endpoint security sees processes running but doesn't understand agent behavior. Network tools see API calls but can't distinguish legitimate automation from compromise. Identity systems see OAuth grants but don't flag AI agent connections as unusual.

Reco is one of the few SaaS security platforms that can detect OpenClaw integrations across your environment.

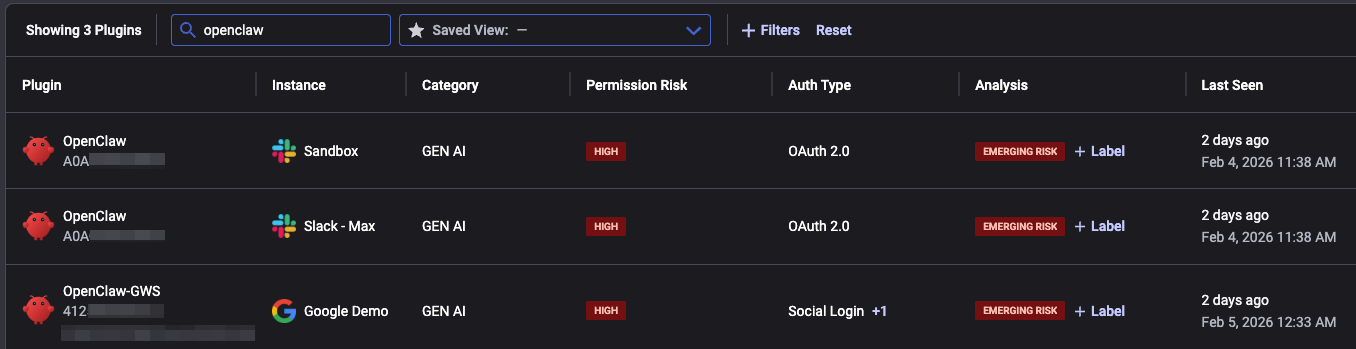

Reco's plugin page lets you search for all plugins associated with OpenClaw. As shown below, apps associated with recent real-world examples of elevated-risk patterns are marked with an "emerging risk" label.

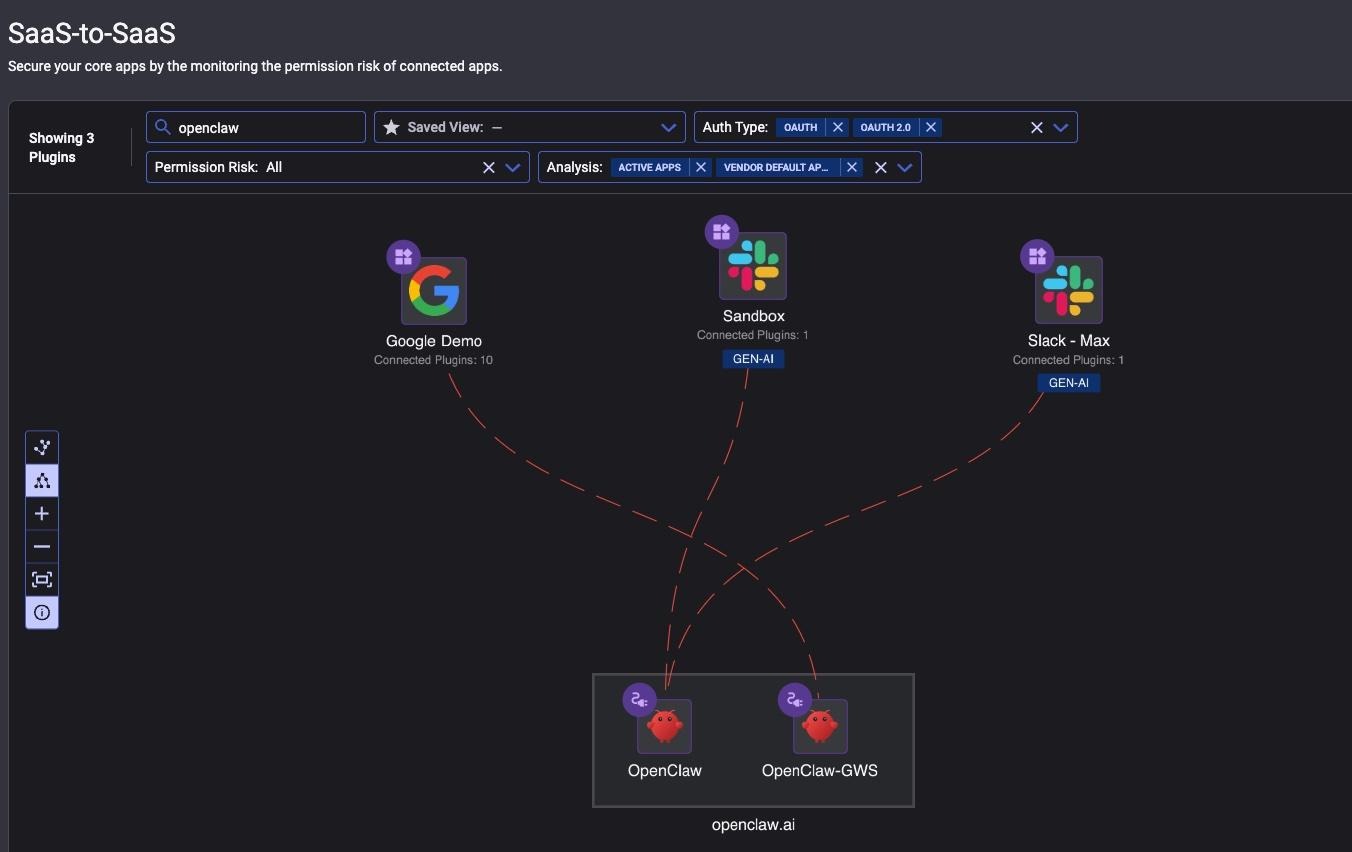

The SaaS-to-SaaS page provides a graph-based visualization of OpenClaw's integrations with your organization's SaaS applications. In our example, OpenClaw was connected to both Google Workspace and Slack.

Hovering over the dashed line shows the exact permissions and roles granted to OpenClaw. Reco automatically identifies and flags high-risk permissions, such as gmail.modify, gmail.settings.basic, and gmail.settings.sharing, so security teams can quickly assess which integrations pose the greatest risk to sensitive data:

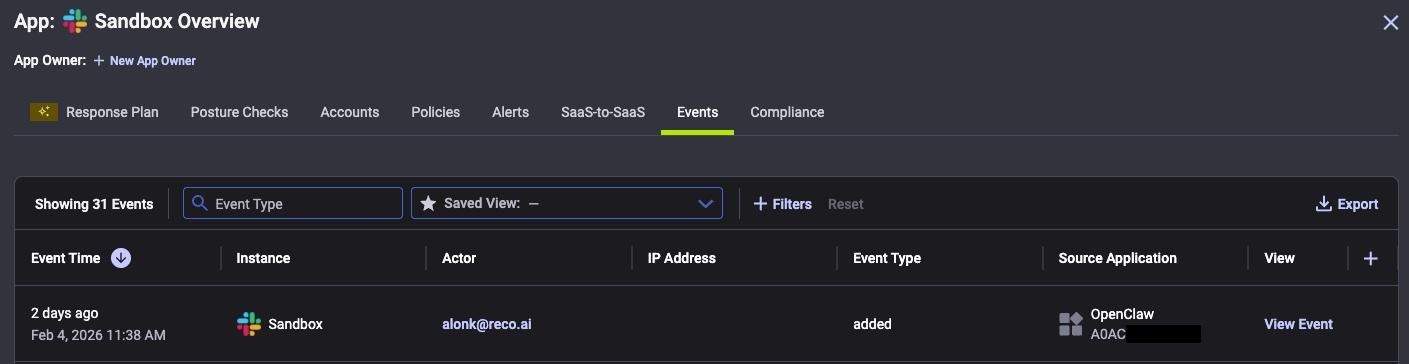
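The kind of scope check described above can be sketched in a few lines of Python. This is not Reco's detection logic: the `HIGH_RISK_SCOPES` set is a hypothetical, illustrative list containing only the three Gmail scopes named in the text, and `flag_high_risk_scopes` is a made-up name. Google OAuth scopes are usually granted as full URIs under `https://www.googleapis.com/auth/`, so the sketch normalizes them before comparing.

```python
from typing import Iterable, List

GOOGLE_SCOPE_PREFIX = "https://www.googleapis.com/auth/"

# Illustrative only: the three Gmail scopes named above, not a complete rule set.
HIGH_RISK_SCOPES = {
    "gmail.modify",
    "gmail.settings.basic",
    "gmail.settings.sharing",
}


def flag_high_risk_scopes(granted: Iterable[str]) -> List[str]:
    """Return the granted scopes found in HIGH_RISK_SCOPES, deduplicated and sorted.

    Accepts either full scope URIs or their short suffixes.
    """
    flagged = set()
    for scope in granted:
        # Strip the Google prefix so full URIs and short names compare equally.
        if scope.startswith(GOOGLE_SCOPE_PREFIX):
            scope = scope[len(GOOGLE_SCOPE_PREFIX):]
        if scope in HIGH_RISK_SCOPES:
            flagged.add(scope)
    return sorted(flagged)


if __name__ == "__main__":
    grants = [
        "https://www.googleapis.com/auth/gmail.modify",
        "https://www.googleapis.com/auth/calendar.readonly",
        "gmail.settings.sharing",
    ]
    print(flag_high_risk_scopes(grants))  # ['gmail.modify', 'gmail.settings.sharing']
```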

For additional details about the relevant application, use the "events" pane.

This visibility allows security teams to identify AI agent connections, audit which users have granted access, and investigate potential exposure before incidents occur.

During our investigation, the Reco Security Research team also identified a specific user-agent string associated with OpenClaw activity in Slack:

`ApiApp/<SlackAppID> @slack:socket-mode/2.0.5 @slack:bolt/4.6.0 @slack:web-api/7.13.0 openclaw/22.22.0 <OS-Identifier>`

Security teams can search Slack access logs for this user-agent to find OpenClaw connections directly. The exact string may vary (the app ID, OS identifier, and library versions will differ between deployments), but it is a useful signal for identifying OpenClaw usage across your environment.
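A minimal Python sketch of that search, assuming the Slack access logs have already been exported as a list of records with a `user_agent` field (the field names and sample values below are hypothetical). It matches only the `openclaw/<version>` token, since the rest of the string varies between deployments.

```python
import re
from typing import Dict, Iterable, List

# Match the "openclaw/<version>" token and capture the version.
OPENCLAW_UA = re.compile(r"\bopenclaw/(\S+)", re.IGNORECASE)


def find_openclaw_sessions(records: Iterable[Dict[str, str]]) -> List[Dict[str, str]]:
    """Return log records whose user agent contains an openclaw/<version> token.

    Each record is assumed to be a dict with a "user_agent" key; other keys
    are copied through, and the matched version is added as "openclaw_version".
    """
    hits = []
    for rec in records:
        # Treat a missing or null user agent as an empty string.
        match = OPENCLAW_UA.search(rec.get("user_agent") or "")
        if match:
            hits.append({**rec, "openclaw_version": match.group(1)})
    return hits


if __name__ == "__main__":
    # Hypothetical sample records (documentation IP ranges, made-up IDs).
    logs = [
        {"user_id": "U01", "ip": "203.0.113.5",
         "user_agent": "ApiApp/A123 @slack:socket-mode/2.0.5 @slack:bolt/4.6.0 "
                       "@slack:web-api/7.13.0 openclaw/22.22.0 linux"},
        {"user_id": "U02", "ip": "198.51.100.7",
         "user_agent": "Mozilla/5.0 (Macintosh) Slack/4.41"},
    ]
    for hit in find_openclaw_sessions(logs):
        print(hit["user_id"], hit["ip"], hit["openclaw_version"])
```

Matching on the token rather than the full string keeps the search working when the app ID, OS identifier, or library versions change.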

The Bigger Picture

OpenClaw is not going away - and even if it does, the demand for autonomous AI agents means another project will take its place.

The productivity benefits are real, and employees will continue adopting these tools regardless of the risks. The security community has called OpenClaw everything from a "security nightmare" to a "dumpster fire," but its 135,000 GitHub stars make one thing clear: users are willing to accept the trade-off.

For security teams, the priority needs to be visibility. You cannot secure what you cannot see. AI agents represent a new category of application that demands new approaches to detection and monitoring - and waiting for the next incident is not a strategy.

Contact us today to see if OpenClaw is already connected to your environment: https://www.reco.ai/demo-request.

Alon Klayman

ABOUT THE AUTHOR

Alon Klayman is a seasoned Security Researcher with a decade of experience in cybersecurity and IT. He specializes in cloud and SaaS security, threat research, incident response, and threat hunting, with a strong focus on Azure and Microsoft 365 security threats and attack techniques. He currently serves as a Senior Security Researcher at Reco. Throughout his career, Alon has held key roles including DFIR Team Leader, Security Research Tech Lead, penetration tester, and cybersecurity consultant. He is also a DEF CON speaker and holds several advanced certifications, including GCFA, GNFA, CARTP, CESP, and CRTP.