From Shadow IT to Shadow AI: The Next Enterprise Risk

Why Shadow AI Is Emerging Faster Than Shadow IT Ever Did

Shadow AI is spreading through enterprises at a pace that earlier generations of Shadow IT never reached. Employees can access AI models directly within the tools they already use, which removes the friction that once slowed the adoption of unapproved apps. NIST notes in its AI Risk Management Framework that generative AI introduces new patterns of informal use that emerge more rapidly than organizations can document or govern.

The result is an environment where prompt-driven actions, embedded copilots, and personal AI accounts drive rapid growth in unmonitored activity. This speed creates challenges for security teams who cannot rely on traditional discovery methods to detect or understand how AI models are being used across the organization.

Shadow IT vs. Shadow AI: Key Differences

Before exploring the risks in depth, it is important to establish the differences between these two categories of unsanctioned activity. The following table outlines the structural, behavioral, and security characteristics that separate modern Shadow AI from traditional Shadow IT:

Business and Security Risks Introduced by Shadow AI

Shadow AI amplifies enterprise exposure because AI tools influence decisions, handle sensitive content, and operate in places security teams cannot easily observe. These risks appear across environments where employees rely on public or unapproved models.

- Permanent Data Exposure and IP Leakage: Employees paste source code, internal documents, HR files, and customer information into public AI tools that may retain or reuse data. This creates lasting exposure and weakens control over intellectual property, especially when prompts involve unreviewed personal accounts.

- Regulatory and Compliance Violations at Scale: Sending personal or confidential data to third-party models can violate GDPR, HIPAA, and contractual obligations. Shadow AI often appears in HR and legal workflows, where unapproved tools handle regulated content without formal checks.

- Hallucinated Outputs and Decision-Making Failures: AI-generated outputs may be inaccurate or biased, yet employees frequently accept them without validation. This leads to flawed code, incorrect analysis, or operational decisions that propagate errors into production and customer-facing environments.

- Reputational Damage From Unvetted AI Usage: When employees rely on external AI tools informally, incorrect outputs, exposed data, or biased results can reach clients or partners. These incidents erode trust and create public relations issues once it becomes clear that the organization lacked oversight of AI-driven work.

Mapping the Shadow AI Adoption Curve in the Enterprise

Shadow AI appears in consistent behavioral patterns as employees move from light experimentation to full reliance on unapproved models. These stages develop faster than traditional governance structures, which leaves security teams unable to track how prompts, models, and AI assistants enter daily work.

Stage 1: Curiosity and Individual Exploration

Employees begin experimenting with AI tools to summarize content, generate drafts, troubleshoot code, or test ideas. These trials usually occur through personal accounts with no monitoring or approval. Research from ISACA’s 2025 analysis of Shadow AI confirms that many organizations first encounter AI usage through informal, individual experimentation that happens long before governance practices are in place.

Stage 2: Workflow Embedding Without Approval

As confidence grows, employees weave AI into recurring tasks, such as drafting responses, analyzing documents, testing queries, or running small automations. This stage can be described as a governance drift zone, where formal policies exist but employee behavior moves faster than enforcement. AI becomes part of day-to-day operations, yet the organization still lacks visibility into what data employees share with public models.

Stage 3: Organizational Dependence and Risk Blindness

Eventually, unapproved AI becomes essential to team productivity. Shadow AI-driven processes expand across customer support, engineering, sales, HR, and operations. At this point, employees trust AI outputs enough to let them influence code, analysis, and decisions without validation. The organization now relies on workflows it never approved, cannot audit, and cannot recreate if a model changes, becomes restricted, or fails. This creates operational fragility and deep blind spots for IT and security teams.

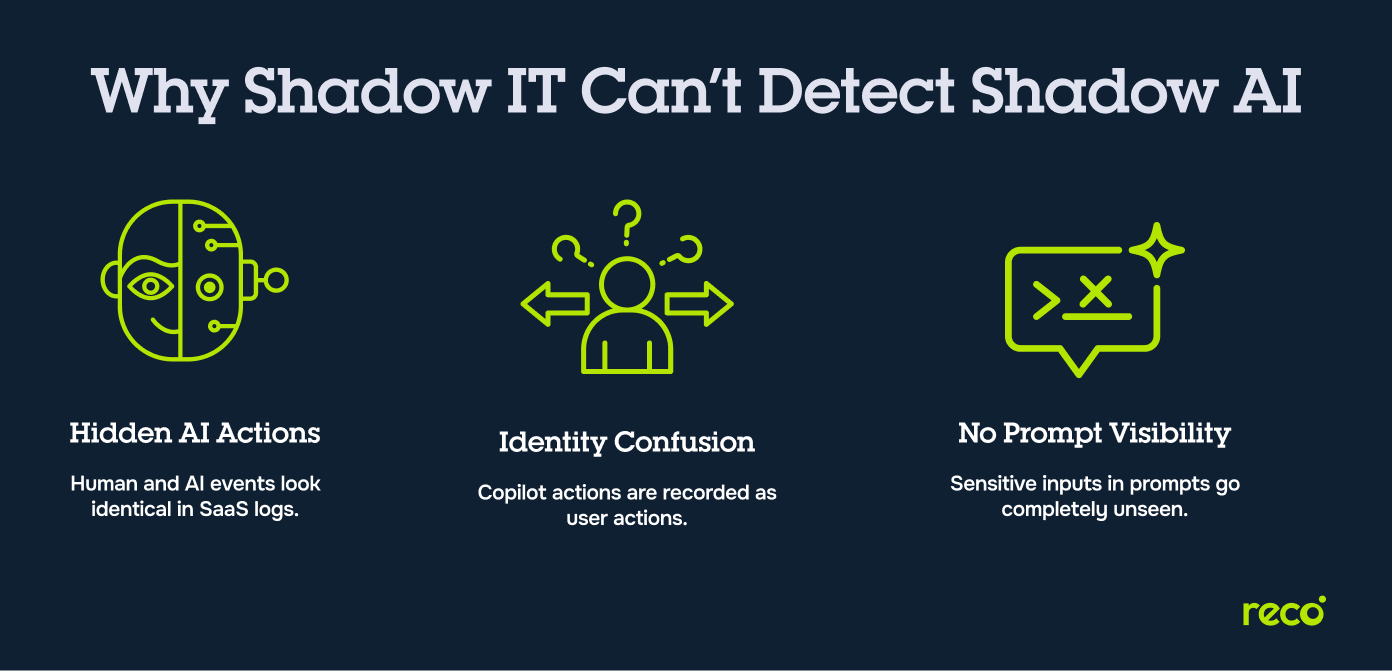

Why Traditional Shadow IT Controls Aren’t Enough to Catch Shadow AI

Traditional Shadow IT controls were built to detect unapproved apps, not invisible model interactions. AI activity blends into normal user behavior, and most SaaS platforms do not expose prompt-level actions or separate human and model-generated events. This creates gaps that leave security teams without a reliable view of how employees interact with AI inside their daily tools.

- SaaS Logging Blind Spots Around AI Copilot Actions: Most SaaS platforms do not separate human activity from model activity, so prompts, generated content, and automated actions appear as standard user events. This makes AI usage invisible to discovery tools that rely on login events, file movements, or API calls.

- Identity-to-Action Disconnect in AI Usage: AI copilots often perform actions on behalf of users, but logs record these actions as if they were human-initiated. This disconnect hides who actually created a file, generated code, posted content, or triggered an automation, which prevents accurate auditing and incident reconstruction.

- Inability to Classify AI Prompts or Monitor Sensitive Inputs: Traditional controls focus on file uploads, API calls, or data transfers. They cannot detect when employees paste source code, HR data, legal files, or customer content into an AI prompt. Without prompt visibility, security teams cannot classify the data entering AI models or assess compliance risk.
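To make the prompt-visibility gap concrete, here is a minimal sketch of what scanning prompt text for sensitive patterns could look like if the text were available to a control point. The pattern names and regular expressions are illustrative assumptions, not a real DLP rule set, and `scan_prompt` is a hypothetical helper rather than part of any product.

```python
import re

# Hypothetical, deliberately simple patterns for illustration only.
# Real data loss prevention rules are broader and tuned per organization.
SENSITIVE_PATTERNS = {
    "email_address": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "private_key": re.compile(r"-----BEGIN (?:RSA |EC )?PRIVATE KEY-----"),
    "us_ssn_like": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def scan_prompt(text: str) -> list[str]:
    """Return the sorted names of every pattern that matches the prompt text."""
    return sorted(
        name for name, pattern in SENSITIVE_PATTERNS.items() if pattern.search(text)
    )


if __name__ == "__main__":
    prompt = "Draft a reply to jane.doe@example.com about SSN 123-45-6789"
    print(scan_prompt(prompt))
```

A check like this only helps where prompt text can actually be intercepted, which is exactly what most SaaS logs do not provide.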

Governance and Access Control Gaps Created by Shadow AI

Shadow AI introduces governance challenges that traditional access rules cannot address. AI models process prompts, generate actions, and influence decisions in ways that do not align with existing identity, policy, or monitoring structures. This creates blind spots that weaken control across the organization.

Lack of Enforceable Policies for Public AI Tools

Most organizations have guidelines for SaaS adoption, but these policies do not translate to public AI platforms that employees access with personal accounts. Even when rules exist, enforcement is limited because security teams cannot see which models employees use, what data they share, or how often they rely on AI to complete work. This gap grows as AI becomes embedded in common productivity tools.

No Oversight of Prompt Content or Copilot Decisions

AI copilots generate text, code, analysis, and workflow actions, yet most platforms do not provide visibility into the underlying prompts or reasoning paths that led to those outputs. This lack of transparency is consistent with findings from ENISA’s research on AI system audits, which highlights the difficulty of reviewing model-generated behavior and understanding decisions made without direct human input.

Inability to Map AI Interactions to Risk Levels or Data Classifications

Standard access controls rely on known data flows and predictable user actions. AI disrupts this model because prompts may contain source code, HR records, legal documents, or sensitive files that do not pass through traditional classification tools. Without prompt-level visibility, security teams cannot assign risk levels, identify regulated content, or align AI interactions with internal data handling requirements.
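As a rough illustration of mapping AI interactions to risk levels, the sketch below assigns a tier from the data classification labels attached to an interaction and from whether the tool is approved. The label names, the `AIInteraction` record, and the scoring rule are all assumptions made for this example. In practice the labels would have to come from a classification step that Shadow AI usage typically bypasses.

```python
from dataclasses import dataclass
from enum import IntEnum


class Risk(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


# Hypothetical classification labels and their baseline risk tier.
LABEL_RISK = {
    "public": Risk.LOW,
    "internal": Risk.MEDIUM,
    "source_code": Risk.HIGH,
    "hr_record": Risk.HIGH,
    "legal_document": Risk.HIGH,
}


@dataclass(frozen=True)
class AIInteraction:
    user: str
    tool: str
    tool_approved: bool
    data_labels: tuple[str, ...]


def assess(interaction: AIInteraction) -> Risk:
    """Assign a risk tier from data labels and tool approval status."""
    # Unknown labels are treated as MEDIUM, a conservative assumption.
    base = max(
        (LABEL_RISK.get(label, Risk.MEDIUM) for label in interaction.data_labels),
        default=Risk.LOW,
    )
    # An unapproved tool raises the tier by one step, capped at HIGH.
    if not interaction.tool_approved:
        return Risk(min(base + 1, Risk.HIGH))
    return base


if __name__ == "__main__":
    event = AIInteraction("alice", "personal-chatbot", False, ("internal",))
    print(assess(event).name)
```

The one-step escalation for unapproved tools is a design choice for the sketch. An organization could equally block such interactions outright or weight them by department.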

Mitigation Strategies for Shadow AI in Enterprise Environments

Enterprises can reduce Shadow AI exposure by combining policy clarity, employee training, controlled enablement, and continuous monitoring. The strategies below focus on building structure without slowing productivity:

How Reco Delivers Unified Access Intelligence for Shadow AI

Reco helps organizations understand how employees interact with SaaS applications by correlating user identities, permissions, configuration settings, shared resources, and behavioral patterns. These capabilities extend to Shadow AI because many AI tools sit inside SaaS platforms or appear through user-driven activity that Reco already monitors.

- Real-Time Discovery of Shadow AI Usage Across SaaS Applications: Reco identifies unapproved or unknown SaaS applications, including AI tools and AI-enabled features, by analyzing user actions and app connections across the environment. This helps organizations see when employees introduce new AI applications or interact with AI-driven assistants inside the SaaS stack.

- Monitoring Sensitive Data Shared With AI Tools in Context: Reco detects data exposure risks within SaaS platforms by examining shared files, resource access, permissions, and unusual behavior. When employees use AI features inside these platforms, Reco can highlight when sensitive data is involved, based on identities, access rights, and the context of the interaction.

- Detecting Unapproved AI Prompts Based on Identity and Role: Reco maps every action to a verified identity and evaluates it against the user’s permissions and past behavior. When AI-related activity occurs inside supported SaaS applications, Reco can flag actions that do not align with the user’s role, established policies, or expected access patterns.

- Integrating AI Activity Insights Into SIEM, DLP, and IR Workflows: Reco integrates with existing security workflows by sending identity-centric insights and SaaS activity signals into SIEM, SOAR, and DLP solutions. This allows security teams to incorporate AI-related behavior detected within SaaS platforms into their investigation, incident response, and policy enforcement processes.

Conclusion

Shadow AI has moved from occasional experimentation to a steady presence in everyday work. A 2025 report from McKinsey & Company found that 88% of organizations now use AI in at least one business function, yet most are still early in building mature governance practices. This gap between enthusiasm and oversight shows why clear policies, meaningful visibility, and thoughtful access controls matter. When employees understand how to use AI safely, and leaders recognize where it appears in daily workflows, organizations can support innovation while protecting what matters. Acting early creates a safer path forward.

How can security teams detect shadow AI tools used within SaaS platforms like Slack or Google Workspace?

Detection works best when teams combine identity visibility with SaaS activity signals rather than relying on network blocks alone.

- Monitor OAuth grants to uncover AI tools connected to personal or non-approved accounts.

- Review app usage metadata inside Slack, Google Workspace, Notion, and M365 to identify AI-generated events blended with user actions.

- Correlate identity behavior across SaaS apps to flag anomalous actions consistent with AI assistance.

- Integrate SaaS logs into SIEM tools to detect unusual sequences such as rapid content generation or repeated summarization tasks.
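The OAuth step above can be sketched as a simple allowlist review. This example assumes grant records have already been exported from the identity provider or SaaS admin console into a list. The `OAuthGrant` fields, the app names, and the scope names are hypothetical placeholders, not the schema of any specific platform.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OAuthGrant:
    user: str
    app_name: str
    scopes: tuple[str, ...]


# Hypothetical allowlist of sanctioned apps and scope names considered broad.
APPROVED_APPS = frozenset({"corporate-assistant"})
BROAD_SCOPES = frozenset({"files.read_all", "mail.read"})


def review_grants(grants: list[OAuthGrant]) -> list[tuple[str, str, list[str]]]:
    """Return (user, app, broad scopes) for every grant to a non-approved app."""
    findings = []
    for grant in grants:
        if grant.app_name in APPROVED_APPS:
            continue
        broad = sorted(set(grant.scopes) & BROAD_SCOPES)
        findings.append((grant.user, grant.app_name, broad))
    return findings


if __name__ == "__main__":
    exported = [
        OAuthGrant("alice", "corporate-assistant", ("files.read_all",)),
        OAuthGrant("bob", "notes-summarizer-ai", ("files.read_all", "profile")),
    ]
    for finding in review_grants(exported):
        print(finding)
```

Findings with a non-empty scope list are the ones to triage first, since they pair an unsanctioned app with wide data access.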

What are the legal and compliance risks of employees using public AI tools without approval?

Unapproved AI usage exposes organizations to multiple legal and regulatory concerns.

- Sharing internal or customer data with public AI models may breach GDPR, HIPAA, PCI, or data residency obligations.

- Terms of service for public AI tools often allow the provider to retain submitted data for training or logging, which can compromise confidentiality and IP protection.

- Cross-border model routing may violate contractual data handling requirements.

- AI-generated outputs can create copyright exposure or propagate biased or discriminatory decisions that lead to complaints or regulatory escalation.

Why is shadow AI harder to govern than traditional shadow IT?

Shadow AI introduces risks that fall outside the scope of traditional SaaS discovery and access controls.

- AI actions are embedded inside tools employees already use, so the activity appears as normal user behavior.

- Prompt inputs and model-generated outputs are rarely logged, making visibility harder than tracking app logins or file uploads.

- AI agents may perform actions on behalf of users, reducing clarity around identity-to-action mapping.

- Data classification controls cannot detect sensitive information pasted into prompts or fed into copilots.

- Governance frameworks lag because AI tools evolve faster than policy cycles.

How does Reco identify AI Copilot usage embedded in tools like Notion, Slack, or M365?

Reco correlates identity data, permissions, SaaS activity patterns, and unusual behavior across integrated applications. When AI assistance appears inside platforms such as Notion, Slack, or M365, Reco highlights activity patterns that do not align with a user’s typical workflow, role, or access history. This helps security teams understand where AI interaction may be occurring, even when the platform does not provide explicit prompt-level logs.

Can Reco enforce data policies based on prompt content and user role?

Reco can enforce identity-aware and role-based policies by monitoring actions within supported SaaS applications and flagging behavior that does not match permissions, expected usage patterns, or data access rules. While Reco does not inspect the internal content of AI prompts directly, it detects the surrounding context, associated data handling, and the user’s identity footprint to help enforce safe AI usage.

Gal Nakash

ABOUT THE AUTHOR

Gal is the Cofounder & CPO of Reco. He is a former Lieutenant Colonel in the Israeli Prime Minister's Office and a tech enthusiast with a background as a security researcher and hacker. Gal has led teams in multiple cybersecurity areas, with expertise in the human element.

