Your company probably blocked ChatGPT sometime in 2023. Maybe you added it to the firewall. Maybe you sent a strongly worded email. Maybe you did both.

It didn't work.

New data from Reco's 2025 Shadow AI Report shows 71% of knowledge workers still use AI tools without IT approval. The tools just changed. Instead of ChatGPT, employees shifted to Otter.ai, Claude, Perplexity, and dozens of specialized AI apps that fly under the radar.

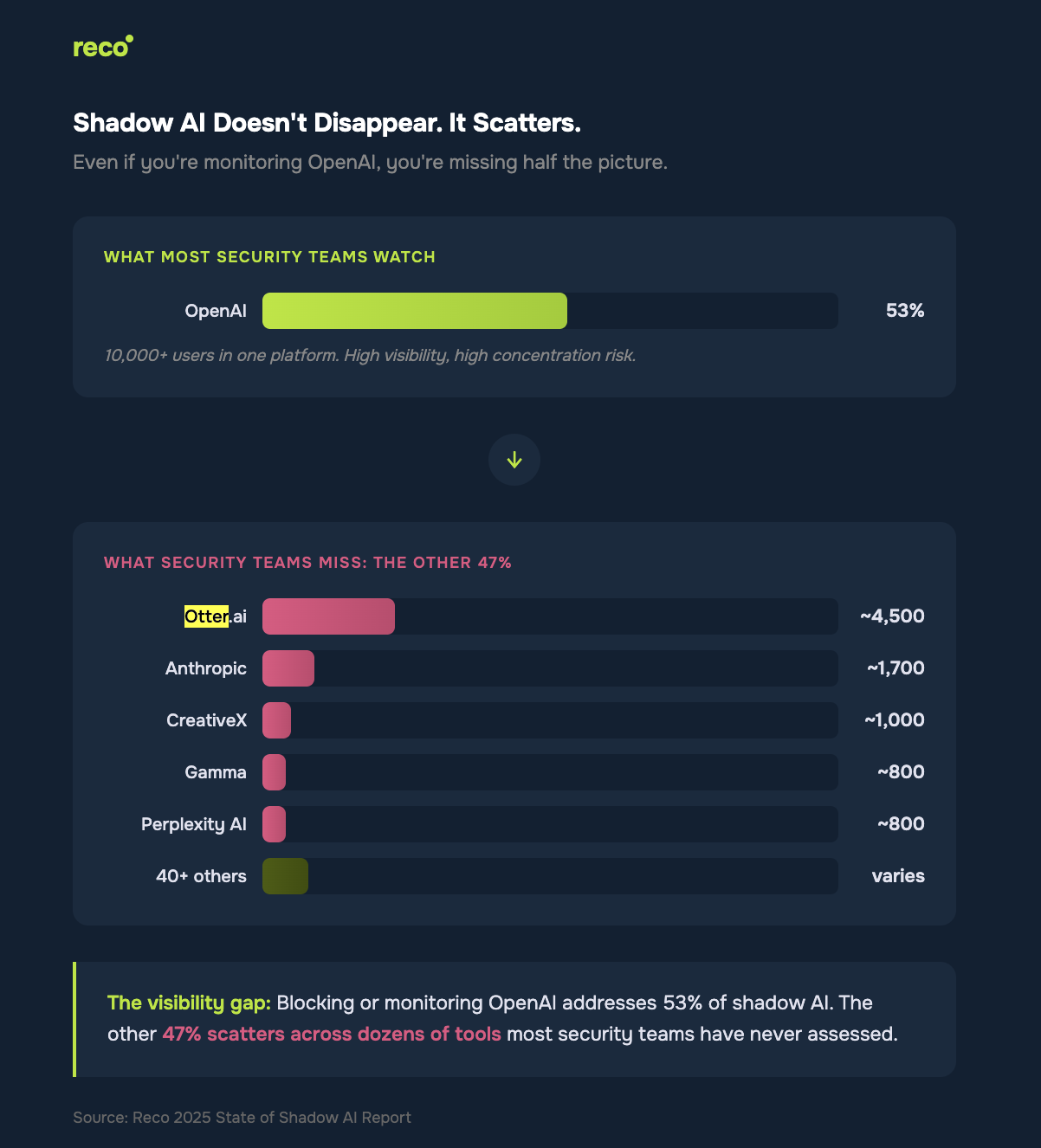

When you block one AI tool, employees don't stop using AI. They find alternatives. Reco tracked shadow AI usage across their enterprise customer base and found OpenAI still commands 53% of all shadow AI activity, with over 10,000 enterprise users in their study alone.

But here's what's interesting: the remaining 47% is fragmented across dozens of tools that most security teams have never heard of. Otter.ai has ~4,500 users. Anthropic has ~1,700. Then there's CreativeX, Gamma, Perplexity AI, Valence Chat Bot, Blip, Synthesia, and a long tail of specialized tools.

Each new app is a new OAuth connection. A new data flow. A new vendor you haven't assessed.

The most surprising finding: shadow AI tools don't disappear after initial experimentation. They become infrastructure.

Reco found some applications running unsanctioned for more than 400 days on average: CreativeX at 403 days and System.com at 401 days. Even Blip averaged 203 days.

These aren't employees "trying something out." These are tools embedded in daily workflows, processing sensitive data for over a year without security oversight.

The report labels an application as "stopped" only after 60 days of no access or activity. Most shadow AI never stops.
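To make the report's 60-day rule concrete, here is a minimal Python sketch that classifies a single app as active or stopped from its first and last observed access dates. The function name `usage_status` and the choice to count exactly 60 idle days as "stopped" are assumptions for illustration; Reco's actual detection logic is not described in this article.

```python
from datetime import date, timedelta

# Assumption: mirrors the report's rule that an app counts as "stopped" only
# after 60 days with no access or activity; exactly 60 idle days counts as stopped.
STOPPED_AFTER = timedelta(days=60)


def usage_status(first_seen: date, last_seen: date, today: date) -> tuple[str, int]:
    """Return ("active" or "stopped", days between first and last observed use)."""
    idle = today - last_seen
    status = "stopped" if idle >= STOPPED_AFTER else "active"
    # Observed duration runs from the first access to the most recent one.
    duration_days = (last_seen - first_seen).days
    return status, duration_days


if __name__ == "__main__":
    # Hypothetical app first seen in April 2024 and still used last week.
    print(usage_status(date(2024, 4, 1), date(2025, 5, 30), date(2025, 6, 1)))
    # → ('active', 424)
```

Under a rule like this, an app used once a month never reaches the stopped state, which is why long-lived shadow AI accumulates.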

Once employees depend on a tool for 100+ days, removing it means disrupting their work. They've built processes around it. They've uploaded client data to it. They've connected it to other systems. The security debt compounds daily.

You'd expect large enterprises with loose controls to have the worst shadow AI problems. The data shows the opposite: smaller companies are hit hardest.

The pattern makes sense. Small companies have the agility to adopt new tools quickly and the fewest security staff to monitor them. They face enterprise-level compliance requirements with startup-level security resources.

Not all shadow AI is equally dangerous. Reco scored AI applications across 20 security indicators: encryption, MFA, audit logging, data retention policies, SSO support, and more.

The F-rated tools have no encryption at rest, no MFA, no audit logging, and no compliance certifications. They're processing corporate data daily.
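One way to picture an indicator-based rating is a pass/fail checklist turned into a percentage and then a letter grade. The sketch below assumes equal weights and hypothetical A-to-F cutoffs; it is not Reco's scoring method, and it uses only the five indicators the article names rather than all 20.

```python
# Hypothetical letter-grade cutoffs on a 0-100 scale (not Reco's method).
GRADE_CUTOFFS = [(90.0, "A"), (80.0, "B"), (70.0, "C"), (60.0, "D")]


def security_grade(indicators: dict[str, bool]) -> tuple[float, str]:
    """Score an app as the percentage of indicators it passes, then map to a grade."""
    if not indicators:
        raise ValueError("at least one indicator is required")
    score = 100 * sum(indicators.values()) / len(indicators)
    for cutoff, grade in GRADE_CUTOFFS:
        if score >= cutoff:
            return score, grade
    return score, "F"  # below every cutoff


if __name__ == "__main__":
    # Equal weights; keys are the indicators named in the article.
    example_app = {
        "encryption_at_rest": False,
        "mfa": False,
        "audit_logging": False,
        "data_retention_policy": True,
        "sso_support": True,
    }
    print(security_grade(example_app))  # → (40.0, 'F')
```

A real scheme would likely weight controls such as encryption at rest more heavily than others; equal weights just keep the sketch readable.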

The uncomfortable finding: the most popular tools aren't the most secure. CreativeX and Otter.ai have thousands of users despite security scores that should disqualify them from enterprise use. Employees choose AI tools like consumer apps: features first, security never.

Blocking doesn't work because it treats shadow AI as a technology problem. It's a behavior problem.

Employees use shadow AI because it makes them more productive. The report notes: "The employees creating these risks are driving unprecedented productivity gains. They're not reckless; they're resourceful."

What works instead:

Visibility first. You can't govern what you can't see. One Reco customer, Wellstar Health System, expected "several hundred apps" in its environment and discovered more than 1,100. Most organizations underestimate their shadow AI footprint by 3-4x.
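Because each new AI app typically arrives as an OAuth connection, a first-pass inventory can be built by grouping consent events by app. The `OAuthGrant` record and its fields are hypothetical stand-ins for whatever export your identity provider offers; real audit logs differ by vendor, and a grant date shows when an app was connected, not whether it is still in use.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class OAuthGrant:
    """Hypothetical OAuth consent event exported from an identity provider."""
    user: str
    app: str
    granted_on: date


def build_inventory(grants: list[OAuthGrant]) -> dict[str, dict]:
    """Count distinct users and first/last grant dates for each connected app."""
    users: dict[str, set[str]] = defaultdict(set)
    first_seen: dict[str, date] = {}
    last_seen: dict[str, date] = {}
    for g in grants:
        users[g.app].add(g.user)
        first_seen[g.app] = min(first_seen.get(g.app, g.granted_on), g.granted_on)
        last_seen[g.app] = max(last_seen.get(g.app, g.granted_on), g.granted_on)
    return {
        app: {"users": len(u), "first_seen": first_seen[app], "last_seen": last_seen[app]}
        for app, u in users.items()
    }


if __name__ == "__main__":
    grants = [
        OAuthGrant("alice", "Otter.ai", date(2025, 1, 10)),
        OAuthGrant("bob", "Otter.ai", date(2025, 2, 1)),
        OAuthGrant("alice", "Otter.ai", date(2025, 3, 1)),
        OAuthGrant("carol", "Gamma", date(2025, 2, 15)),
    ]
    for app, summary in build_inventory(grants).items():
        print(app, summary)
```

Comparing the number of distinct apps this produces against what the team expected is one quick way to gauge the underestimate.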

Pre-approved alternatives. Publish a list of vetted AI tools that meet security requirements. Employees want to do the right thing. They just don't know what "right" looks like.

Risk-based prioritization. Focus on tools accessing email, processing customer data, or generating code first. Let the productivity tools that pose minimal security threats slide temporarily.
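A risk-based review queue can be as simple as a sort key: apps with any high-risk capability go first, then larger user bases. The capability tags and app names below are hypothetical; in practice they would come from your own app inventory and assessments.

```python
# Hypothetical capability tags for the high-risk categories named above.
HIGH_RISK = {"email_access", "customer_data", "code_generation"}


def review_order(apps: list[dict]) -> list[str]:
    """Return app names ordered for review: high-risk first, then by user count."""
    def priority(app: dict) -> tuple[bool, int, str]:
        is_high_risk = bool(HIGH_RISK & set(app["capabilities"]))
        # False sorts before True, so high-risk apps land at the front;
        # negating users puts larger user bases first within each group.
        return (not is_high_risk, -app["users"], app["name"])
    return [app["name"] for app in sorted(apps, key=priority)]


if __name__ == "__main__":
    inventory = [
        {"name": "SlideMaker", "capabilities": ["presentations"], "users": 900},
        {"name": "MailBot", "capabilities": ["email_access"], "users": 40},
        {"name": "CodeHelper", "capabilities": ["code_generation"], "users": 300},
    ]
    print(review_order(inventory))  # → ['CodeHelper', 'MailBot', 'SlideMaker']
```

Note that the popular low-risk tool lands last despite having the most users, which is the trade-off this prioritization deliberately makes.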

Time-based audits. Any AI tool showing 60+ days of usage has become embedded. Those need formal assessment within 30 days: approve with controls or migrate to secure alternatives.
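The time-based rule can be sketched as a date calculation. This version assumes the 30-day assessment clock starts the day a tool crosses 60 days since it was first seen, and flags anything past that deadline as overdue; both the clock start and the tool names are assumptions for illustration.

```python
from datetime import date, timedelta

EMBEDDED_AFTER = timedelta(days=60)  # usage length at which a tool counts as embedded
ASSESS_WITHIN = timedelta(days=30)   # window for an approve-or-migrate decision


def audit_queue(first_seen: dict[str, date], today: date) -> list[tuple[str, date, bool]]:
    """Return (app, assessment due date, overdue?) for embedded apps, earliest due first."""
    queue = []
    for app, started in first_seen.items():
        embedded_on = started + EMBEDDED_AFTER
        if today >= embedded_on:
            due = embedded_on + ASSESS_WITHIN  # assumption: clock starts at the 60-day mark
            queue.append((app, due, today > due))
    return sorted(queue, key=lambda item: item[1])


if __name__ == "__main__":
    seen = {"ToolA": date(2025, 1, 1), "ToolB": date(2025, 3, 20), "ToolC": date(2025, 5, 1)}
    for row in audit_queue(seen, date(2025, 6, 1)):
        print(row)
    # ToolC has only 31 days of usage, so it is not queued yet.
```

Starting the clock at the 60-day mark means tools discovered late show up already overdue, which surfaces the long-running cases first.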

IBM's Cost of a Data Breach Report 2025 found that breaches involving shadow AI cost organizations an extra $670,000 on average. One in five organizations in IBM's study (20%) have already suffered a breach tied to shadow AI security incidents.

The 71% using AI without approval aren't going to stop. The question is whether you'll discover what they're using through a security incident or through proactive visibility.

Blocking ChatGPT was the easy decision. The hard work is everything that came after.

Gal is the Cofounder & CPO of Reco and a former Lieutenant Colonel in the Israeli Prime Minister's Office. A tech enthusiast with a background as a security researcher and hacker, he has led teams in multiple cybersecurity areas, with expertise in the human element.