AI has become a core feature of Salesforce offerings, including Einstein, GPT-powered tools, and automation workflows. For admins and architects alike, this puts the emphasis on knowing how to manage and govern these capabilities. Specifically, controls must be in place, data handling must be agreed upon, and AI tools must be used in a way that fits the overarching business goals so they don't create problems of their own.

This article covers the chief concerns in Salesforce AI governance that admins and architects have to address: responsible data usage, transparency, model lifecycle management, and access controls, along with practical tips and code samples.

Salesforce includes AI in different ways: predictive scoring, GPT-based assistants, workflow automation, and more. These tools depend on your data, user inputs, and often third-party models. Governance starts by understanding where AI is being used and how it works.

As an admin or architect, you must know which features are enabled, what data they use, and what output they generate. This helps prevent unexpected behavior or biased decisions. While Salesforce’s Einstein Trust Layer provides built-in measures like data masking, grounding, audit logs, and zero-data retention, the ultimate responsibility for AI governance remains with your organization.

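To review which Einstein features are enabled, you can try a query like this one in the Developer Console. It assumes your org and API version expose AIApplication with the Name and Status fields shown, so verify the object and field names before relying on the result:

    // List active Einstein features in the org.
    // Assumes AIApplication exposes Name and Status in your API version.
    List<AIApplication> enabledApps = [
        SELECT Id, Name, Status FROM AIApplication WHERE Status = 'Active'
    ];
    System.debug(enabledApps);

Explanation: This Apex snippet queries all active AI applications in your org so you can review what's live.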

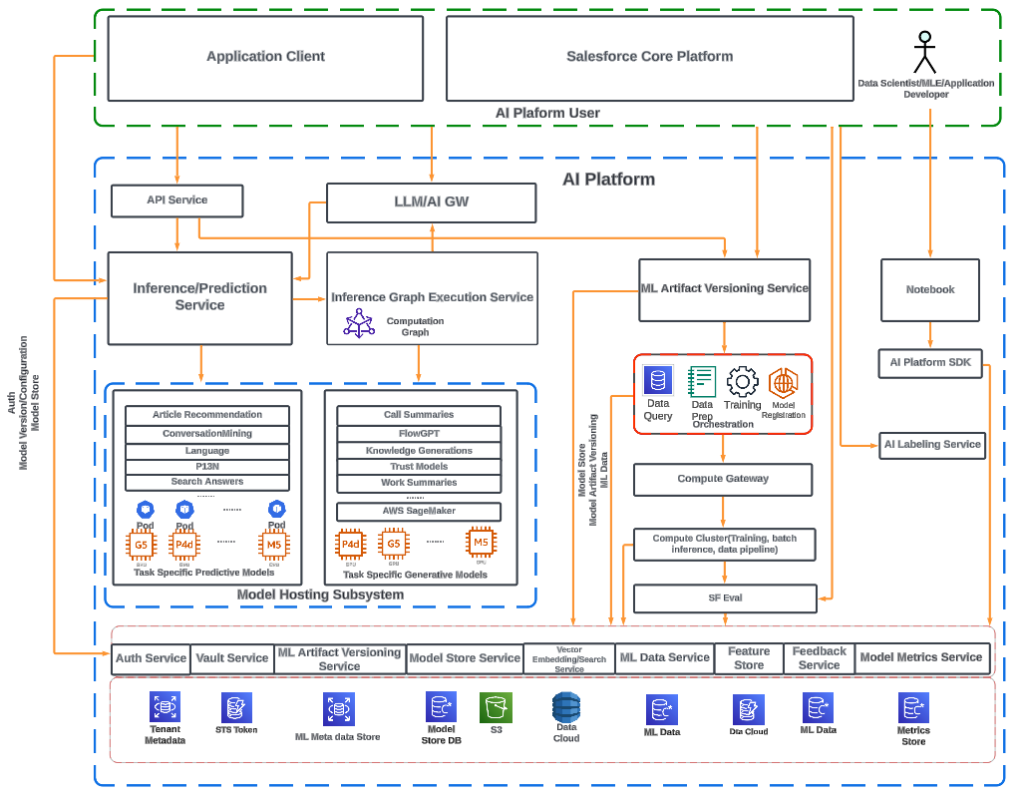

The Salesforce AI platform integrates Einstein intelligence, GPT capabilities, and workflow automation under a unified system. It emphasizes trusted AI deployment, data governance, and seamless alignment with business processes.

Data is the substance behind every AI capability. Understand which data is used, where it is stored, and who is permitted to access it. Structured data includes records such as leads and opportunities, while unstructured data includes chat transcripts and case descriptions.

Salesforce provides field-level security (FLS), object permissions, and sharing rules to restrict access. For compliance, you should also use Data Classification metadata to tag fields that hold PII, PHI, or other sensitive data, so that you keep control over what goes into AI features.

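You can set data classification through the Metadata API, the Tooling API, or the Setup UI. Here’s an example using the Tooling API and a simple curl request:

    # Set the field's Data Sensitivity Level (SecurityClassification in the API).
    curl "https://yourInstance.salesforce.com/services/data/v56.0/tooling/sobjects/FieldDefinition/<fieldId>" \
      -H "Authorization: Bearer <access_token>" \
      -H "Content-Type: application/json" \
      -X PATCH \
      -d '{ "SecurityClassification": "Confidential" }'

Explanation: This sets a field’s sensitivity level to “Confidential,” so your policies can identify it and keep it out of AI features. The Data Sensitivity Level shown in Setup is exposed in the API as SecurityClassification. If your API version does not accept updates on FieldDefinition, set the same value through the field's metadata instead.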

As a best practice, always create field-level policies for sensitive data before enabling AI features.
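One way to picture such a field-level policy is as an allow-list applied before any record reaches an AI feature. The Python sketch below is illustrative only: the function name redact_for_ai, the classification map, and the ordering of sensitivity levels are assumptions standing in for the Data Classification metadata you would export from your own org.

```python
# Hypothetical sketch: strip sensitive fields from a record before it is
# passed to an AI feature. The classification map and the level ordering
# are assumptions, not values read from a real org.

# Levels ordered from least to most sensitive (assumed ordering).
SENSITIVITY_ORDER = ["Public", "Internal", "Confidential", "Restricted", "MissionCritical"]


def redact_for_ai(record, classification, max_level="Internal"):
    """Return a copy of `record` keeping only fields at or below `max_level`.

    Fields with no classification are dropped, so unclassified data
    never reaches the AI feature by default.
    """
    allowed = set(SENSITIVITY_ORDER[: SENSITIVITY_ORDER.index(max_level) + 1])
    return {
        field: value
        for field, value in record.items()
        if classification.get(field) in allowed
    }


# Example data (made up for illustration).
classification = {"Name": "Public", "Industry": "Internal", "SSN__c": "Restricted"}
record = {
    "Name": "Acme",
    "Industry": "Retail",
    "SSN__c": "123-45-6789",
    "Notes__c": "free text",  # unclassified, so it is dropped
}
print(redact_for_ai(record, classification))
# prints {'Name': 'Acme', 'Industry': 'Retail'}
```

Dropping unclassified fields by default is a deliberate choice here: it forces every field to be classified before an AI feature can see it.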

Admins and architects need to understand how AI decisions are made. Salesforce includes some explainability features in Einstein, like scoring factors in Opportunity Scoring. However, these are often limited.

Whenever AI models are used in business-critical processes (like loan approvals or lead prioritization), there should be clear documentation or UI elements showing how the prediction was made. You can surface model metadata using Custom Metadata Types or Lightning Web Components.

Example: Showing a model’s confidence score in a custom component.

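    public class PredictionService {
        // Returns the stored confidence for a record, or null if none exists.
        // AIInsight, Confidence, and RecordId are the names this example assumes.
        public static Decimal getPredictionConfidence(Id recordId) {
            List<AIInsight> results = [
                SELECT Confidence FROM AIInsight WHERE RecordId = :recordId LIMIT 1
            ];
            return results.isEmpty() ? null : results[0].Confidence;
        }
    }

This Apex class fetches a confidence score stored in an AIInsight object and returns null when no prediction exists for the record, rather than throwing a query exception. If you store insights in a custom object, use its API names (custom objects and fields carry the __c suffix). You can use this in a Lightning component to explain prediction reliability.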

Also, build internal dashboards that track AI usage, failed predictions, and data drift. This lets you monitor model health and retrain as needed.
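Data drift can be put on such a dashboard as a single number. The sketch below uses the Population Stability Index (PSI), a common drift metric that the article itself does not prescribe. It assumes you have exported one numeric feature (or score) as two non-empty lists, a baseline sample and a current sample. The function name and the bin count are illustrative choices.

```python
import math


def population_stability_index(baseline, current, bins=10):
    """Compare two numeric samples; 0.0 means identical bucket shares."""
    lo, hi = min(baseline), max(baseline)
    # Fall back to a width of 1.0 when every baseline value is identical.
    width = (hi - lo) / bins or 1.0

    def bucket_shares(values):
        counts = [0] * bins
        for v in values:
            # Clamp so values outside the baseline range land in the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor avoids log(0) for empty buckets.
        return [max(c / len(values), 1e-6) for c in counts]

    base = bucket_shares(baseline)
    cur = bucket_shares(current)
    return sum((c - b) * math.log(c / b) for b, c in zip(base, cur))


# Example data (made up): the current sample is shifted toward higher values.
baseline_scores = [i / 100 for i in range(100)]
current_scores = [0.5 + i / 200 for i in range(100)]

print(population_stability_index(baseline_scores, baseline_scores))  # prints 0.0
print(population_stability_index(baseline_scores, current_scores) > 0.2)  # prints True
```

A PSI above roughly 0.2 is a widely used rule of thumb for a shift worth investigating, but treat the threshold as a starting point to tune for your own data.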

Built-in bias detection in Salesforce is limited, so admins and architects should export model outputs periodically and analyze them for bias. Salesforce does offer filtering through Dataflow and Einstein Discovery, which you can use to rebalance training data.

Steps to reduce bias:

Use Dataflow and Recipe in Einstein Discovery to apply filters and balance your training data.
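For the export-and-analyze step, a minimal Python sketch can compare positive-outcome rates across groups. It assumes the exported model outputs are rows with a group attribute and a boolean outcome. The column names, function names, and sample data are hypothetical, and the disparate impact ratio is one common fairness check rather than a Salesforce feature.

```python
from collections import defaultdict


def selection_rates(rows, group_key, outcome_key):
    """Share of positive outcomes per group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for row in rows:
        group = row[group_key]
        totals[group] += 1
        if row[outcome_key]:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest group rate divided by the highest; 1.0 means parity."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a positive outcome
    return min(rates.values()) / highest


# Example export (made up): region A is prioritized 4 of 5 times, region B 2 of 5.
rows = (
    [{"region": "A", "prioritized": flag} for flag in (1, 1, 1, 1, 0)]
    + [{"region": "B", "prioritized": flag} for flag in (1, 1, 0, 0, 0)]
)

rates = selection_rates(rows, "region", "prioritized")
print(rates)                          # prints {'A': 0.8, 'B': 0.4}
print(disparate_impact_ratio(rates))  # prints 0.5
```

A ratio below 0.8 is often used as a flag for closer review (the "four-fifths" rule of thumb). It signals where to look and is not proof of bias on its own.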

AI governance is not a one-time setup. It needs to be part of your release lifecycle. You must track model versions, who deployed them, and under what conditions.

On the Salesforce AI platform, a CI/CD pipeline can manage continuous integration and deployment of AI models and metadata, enabling controlled rollouts, version tracking, and governance throughout the AI lifecycle.

Use Salesforce DevOps Center or CI/CD pipelines with source control for model deployment metadata. For example, you can check model configs into Git along with their explanations and audit logs.
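One way to make a versioned config auditable is to store a deterministic fingerprint of it next to who deployed it and why. The Python sketch below is a hedged illustration: the function names, the entry layout, and the sample config keys are assumptions, not a Salesforce or DevOps Center format.

```python
import hashlib
import json


def config_fingerprint(config):
    """SHA-256 of the config serialized with sorted keys, so key order is irrelevant."""
    canonical = json.dumps(config, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def audit_entry(model_name, config, deployed_by, note):
    """Build a record that can be committed alongside the config file."""
    return {
        "model": model_name,
        "fingerprint": config_fingerprint(config),
        "deployed_by": deployed_by,
        "note": note,
    }


# Example config (made up for illustration).
config_v1 = {"threshold": 0.7, "features": ["Amount", "StageName"]}
config_v2 = {"threshold": 0.75, "features": ["Amount", "StageName"]}

entry = audit_entry("opportunity_scoring", config_v1, "admin@example.com", "initial rollout")
print(json.dumps(entry, indent=2))

# A changed fingerprint signals that the deployed config differs from the reviewed one.
print(config_fingerprint(config_v1) != config_fingerprint(config_v2))  # prints True
```

Committing the entry with the config gives reviewers a quick check that what was approved is what was deployed.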

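Use an Apex trigger to track changes in AI model settings. The example here is a standard after-update trigger rather than a Change Data Capture (CDC) subscriber, and it assumes that AIApplication supports triggers in your org and carries a custom ModelVersion__c field:

    trigger TrackAIModelChanges on AIApplication (after update) {
        for (AIApplication app : Trigger.new) {
            AIApplication oldApp = Trigger.oldMap.get(app.Id);
            // Log only when the model version actually changed.
            if (app.ModelVersion__c != oldApp.ModelVersion__c) {
                System.debug('Model version updated for ' + app.Name);
            }
        }
    }

This logs any update to the model version and can be extended to notify responsible admins.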

AI features in Salesforce bring enormous power, and with it responsibility. As an admin or architect, you share accountability for ensuring that these features are used correctly. Governance does not simply mean turning off unnecessary features; it also involves managing data, setting access patterns, explaining outcomes, and critically reviewing model behavior over time. Follow standard Salesforce AI best practices, but build your own safeguards wherever they are needed. Given the rapid evolution of AI in Salesforce, retaining control of its usage will be essential to sustained success.